Hi! I’m writing this article as a mini-HOWTO on how to setup a btrfs-raid1 volume on encrypted disks (luks). This page servers as my personal guide/documentation, althought you can use it with little intervention.

Disclaimer: Be very careful! This is a mini-HOWTO article, do not copy/paste commands. Modify them to fit your environment.

$ date -R

Thu, 03 Dec 2020 07:58:49 +0200

Prologue

I had to replace one of my existing data/media setup (btrfs-raid0) due to some random hardware errors in one of the disks. The existing disks are 7.1y WD 1TB and the new disks are WD Purple 4TB.

Western Digital Green 1TB, about 70€ each, SATA III (6 Gbit/s), 7200 RPM, 64 MB Cache

Western Digital Purple 4TB, about 100€ each, SATA III (6 Gbit/s), 5400 RPM, 64 MB CacheThis will give me about 3.64T (from 1.86T). I had concerns with the slower RPM but in the end of this article, you will see some related stats.

My primarly daily use is streaming media (video/audio/images) via minidlna instead of cifs/nfs (samba), although the service is still up & running.

Disks

It is important to use disks with the exact same size and speed. Usually for Raid 1 purposes, I prefer using the same model. One can argue that diversity of models and manufactures to reduce possible firmware issues of a specific series should be preferable. When working with Raid 1, the most important things to consider are:

- Geometry (size)

- RPM (speed)

and all the disks should have the same specs, otherwise size and speed will downgraded to the smaller and slower disk.

Identify Disks

the two (2) Western Digital Purple 4TB are manufacture model: WDC WD40PURZ

The system sees them as:

$ sudo find /sys/devices -type f -name model -exec cat {} +

WDC WD40PURZ-85A

WDC WD40PURZ-85T

try to identify them from the kernel with list block devices:

$ lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sdc 8:32 0 3.6T 0 disk

sde 8:64 0 3.6T 0 disk

verify it with hwinfo

$ hwinfo --short --disk

disk:

/dev/sde WDC WD40PURZ-85A

/dev/sdc WDC WD40PURZ-85T

$ hwinfo --block --short

/dev/sde WDC WD40PURZ-85A

/dev/sdc WDC WD40PURZ-85T

with list hardware:

$ sudo lshw -short | grep disk

/0/100/1f.5/0 /dev/sdc disk 4TB WDC WD40PURZ-85T

/0/100/1f.5/1 /dev/sde disk 4TB WDC WD40PURZ-85A

$ sudo lshw -class disk -json | jq -r .[].product

WDC WD40PURZ-85T

WDC WD40PURZ-85A

Luks

Create Random Encrypted keys

I prefer to use random generated keys for the disk encryption. This is also useful for automated scripts (encrypt/decrypt disks) instead of typing a pass phrase.

Create a folder to save the encrypted keys:

$ sudo mkdir -pv /etc/crypttab.keys/

create keys with dd against urandom:

WD40PURZ-85A

$ sudo dd if=/dev/urandom of=/etc/crypttab.keys/WD40PURZ-85A bs=4096 count=1

1+0 records in

1+0 records out

4096 bytes (4.1 kB, 4.0 KiB) copied, 0.00015914 s, 25.7 MB/s

WD40PURZ-85T

$ sudo dd if=/dev/urandom of=/etc/crypttab.keys/WD40PURZ-85T bs=4096 count=1

1+0 records in

1+0 records out

4096 bytes (4.1 kB, 4.0 KiB) copied, 0.000135452 s, 30.2 MB/s

verify two (2) 4k size random keys, exist on the above directory with list files:

$ sudo ls -l /etc/crypttab.keys/WD40PURZ-85*

-rw-r--r-- 1 root root 4096 Dec 3 08:00 /etc/crypttab.keys/WD40PURZ-85A

-rw-r--r-- 1 root root 4096 Dec 3 08:00 /etc/crypttab.keys/WD40PURZ-85T

Format & Encrypt Hard Disks

It is time to format and encrypt the hard disks with Luks

Be very careful, choose the correct disk, type uppercase YES to confirm.

$ sudo cryptsetup luksFormat /dev/sde --key-file /etc/crypttab.keys/WD40PURZ-85A

WARNING!

========

This will overwrite data on /dev/sde irrevocably.

Are you sure? (Type 'yes' in capital letters): YES

$ sudo cryptsetup luksFormat /dev/sdc --key-file /etc/crypttab.keys/WD40PURZ-85T

WARNING!

========

This will overwrite data on /dev/sdc irrevocably.

Are you sure? (Type 'yes' in capital letters): YES

Verify Encrypted Disks

print block device attributes:

$ sudo blkid | tail -2

/dev/sde: UUID="d5800c02-2840-4ba9-9177-4d8c35edffac" TYPE="crypto_LUKS"

/dev/sdc: UUID="2ffb6115-09fb-4385-a3c9-404df3a9d3bd" TYPE="crypto_LUKS"

Open and Decrypt

opening encrypted disks with luks

- WD40PURZ-85A

$ sudo cryptsetup luksOpen /dev/disk/by-uuid/d5800c02-2840-4ba9-9177-4d8c35edffac WD40PURZ-85A -d /etc/crypttab.keys/WD40PURZ-85A

- WD40PURZ-85T

$ sudo cryptsetup luksOpen /dev/disk/by-uuid/2ffb6115-09fb-4385-a3c9-404df3a9d3bd WD40PURZ-85T -d /etc/crypttab.keys/WD40PURZ-85T

Verify Status

- WD40PURZ-85A

$ sudo cryptsetup status /dev/mapper/WD40PURZ-85A

/dev/mapper/WD40PURZ-85A is active.

type: LUKS2

cipher: aes-xts-plain64

keysize: 512 bits

key location: keyring

device: /dev/sde

sector size: 512

offset: 32768 sectors

size: 7814004400 sectors

mode: read/write

- WD40PURZ-85T

$ sudo cryptsetup status /dev/mapper/WD40PURZ-85T

/dev/mapper/WD40PURZ-85T is active.

type: LUKS2

cipher: aes-xts-plain64

keysize: 512 bits

key location: keyring

device: /dev/sdc

sector size: 512

offset: 32768 sectors

size: 7814004400 sectors

mode: read/write

BTRFS

Current disks

$sudo btrfs device stats /mnt/data/

[/dev/mapper/western1T].write_io_errs 28632

[/dev/mapper/western1T].read_io_errs 916948985

[/dev/mapper/western1T].flush_io_errs 0

[/dev/mapper/western1T].corruption_errs 0

[/dev/mapper/western1T].generation_errs 0

[/dev/mapper/western1Tb].write_io_errs 0

[/dev/mapper/western1Tb].read_io_errs 0

[/dev/mapper/western1Tb].flush_io_errs 0

[/dev/mapper/western1Tb].corruption_errs 0

[/dev/mapper/western1Tb].generation_errs 0

There are a lot of write/read errors :(

btrfs version

$ sudo btrfs --version

btrfs-progs v5.9

$ sudo mkfs.btrfs --version

mkfs.btrfs, part of btrfs-progs v5.9

Create BTRFS Raid 1 Filesystem

by using mkfs, selecting a disk label, choosing raid1 metadata and data to be on both disks (mirror):

$ sudo mkfs.btrfs

-L WD40PURZ

-m raid1

-d raid1

/dev/mapper/WD40PURZ-85A

/dev/mapper/WD40PURZ-85T

or in one-liner (as-root):

mkfs.btrfs -L WD40PURZ -m raid1 -d raid1 /dev/mapper/WD40PURZ-85A /dev/mapper/WD40PURZ-85T

format output

btrfs-progs v5.9

See http://btrfs.wiki.kernel.org for more information.

Label: WD40PURZ

UUID: 095d3b5c-58dc-4893-a79a-98d56a84d75d

Node size: 16384

Sector size: 4096

Filesystem size: 7.28TiB

Block group profiles:

Data: RAID1 1.00GiB

Metadata: RAID1 1.00GiB

System: RAID1 8.00MiB

SSD detected: no

Incompat features: extref, skinny-metadata

Runtime features:

Checksum: crc32c

Number of devices: 2

Devices:

ID SIZE PATH

1 3.64TiB /dev/mapper/WD40PURZ-85A

2 3.64TiB /dev/mapper/WD40PURZ-85T

Notice that both disks have the same UUID (Universal Unique IDentifier) number:

UUID: 095d3b5c-58dc-4893-a79a-98d56a84d75dVerify block device

$ blkid | tail -2

/dev/mapper/WD40PURZ-85A: LABEL="WD40PURZ" UUID="095d3b5c-58dc-4893-a79a-98d56a84d75d" UUID_SUB="75c9e028-2793-4e74-9301-2b443d922c40" BLOCK_SIZE="4096" TYPE="btrfs"

/dev/mapper/WD40PURZ-85T: LABEL="WD40PURZ" UUID="095d3b5c-58dc-4893-a79a-98d56a84d75d" UUID_SUB="2ee4ec50-f221-44a7-aeac-aa75de8cdd86" BLOCK_SIZE="4096" TYPE="btrfs"once more, be aware of the same UUID: 095d3b5c-58dc-4893-a79a-98d56a84d75d on both disks!

Mount new block disk

create a new mount point

$ sudo mkdir -pv /mnt/WD40PURZ

mkdir: created directory '/mnt/WD40PURZ'

append the below entry in /etc/fstab (as-root)

echo 'UUID=095d3b5c-58dc-4893-a79a-98d56a84d75d /mnt/WD40PURZ auto defaults,noauto,user,exec 0 0' >> /etc/fstab

and finally, mount it!

$ sudo mount /mnt/WD40PURZ

$ mount | grep WD

/dev/mapper/WD40PURZ-85A on /mnt/WD40PURZ type btrfs (rw,nosuid,nodev,relatime,space_cache,subvolid=5,subvol=/)

Disk Usage

check disk usage and free space for the new encrypted mount point

$ df -h /mnt/WD40PURZ/

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/WD40PURZ-85A 3.7T 3.4M 3.7T 1% /mnt/WD40PURZ

btrfs filesystem disk usage

$ btrfs filesystem df /mnt/WD40PURZ | column -t

Data, RAID1: total=1.00GiB, used=512.00KiB

System, RAID1: total=8.00MiB, used=16.00KiB

Metadata, RAID1: total=1.00GiB, used=112.00KiB

GlobalReserve, single: total=3.25MiB, used=0.00B

btrfs filesystem show

$ sudo btrfs filesystem show /mnt/WD40PURZ

Label: 'WD40PURZ' uuid: 095d3b5c-58dc-4893-a79a-98d56a84d75d

Total devices 2 FS bytes used 640.00KiB

devid 1 size 3.64TiB used 2.01GiB path /dev/mapper/WD40PURZ-85A

devid 2 size 3.64TiB used 2.01GiB path /dev/mapper/WD40PURZ-85T

stats

$ sudo btrfs device stats /mnt/WD40PURZ/

[/dev/mapper/WD40PURZ-85A].write_io_errs 0

[/dev/mapper/WD40PURZ-85A].read_io_errs 0

[/dev/mapper/WD40PURZ-85A].flush_io_errs 0

[/dev/mapper/WD40PURZ-85A].corruption_errs 0

[/dev/mapper/WD40PURZ-85A].generation_errs 0

[/dev/mapper/WD40PURZ-85T].write_io_errs 0

[/dev/mapper/WD40PURZ-85T].read_io_errs 0

[/dev/mapper/WD40PURZ-85T].flush_io_errs 0

[/dev/mapper/WD40PURZ-85T].corruption_errs 0

[/dev/mapper/WD40PURZ-85T].generation_errs 0

btrfs fi disk usage

btrfs filesystem disk usage

$ sudo btrfs filesystem usage /mnt/WD40PURZ

Overall:

Device size: 7.28TiB

Device allocated: 4.02GiB

Device unallocated: 7.27TiB

Device missing: 0.00B

Used: 1.25MiB

Free (estimated): 3.64TiB (min: 3.64TiB)

Data ratio: 2.00

Metadata ratio: 2.00

Global reserve: 3.25MiB (used: 0.00B)

Multiple profiles: no

Data,RAID1: Size:1.00GiB, Used:512.00KiB (0.05%)

/dev/mapper/WD40PURZ-85A 1.00GiB

/dev/mapper/WD40PURZ-85T 1.00GiB

Metadata,RAID1: Size:1.00GiB, Used:112.00KiB (0.01%)

/dev/mapper/WD40PURZ-85A 1.00GiB

/dev/mapper/WD40PURZ-85T 1.00GiB

System,RAID1: Size:8.00MiB, Used:16.00KiB (0.20%)

/dev/mapper/WD40PURZ-85A 8.00MiB

/dev/mapper/WD40PURZ-85T 8.00MiB

Unallocated:

/dev/mapper/WD40PURZ-85A 3.64TiB

/dev/mapper/WD40PURZ-85T 3.64TiBSpeed

Using hdparm to test/get some speed stats

$ sudo hdparm -tT /dev/sde

/dev/sde:

Timing cached reads: 25224 MB in 1.99 seconds = 12662.08 MB/sec

Timing buffered disk reads: 544 MB in 3.01 seconds = 181.02 MB/sec

$ sudo hdparm -tT /dev/sdc

/dev/sdc:

Timing cached reads: 24852 MB in 1.99 seconds = 12474.20 MB/sec

Timing buffered disk reads: 534 MB in 3.00 seconds = 177.85 MB/sec

$ sudo hdparm -tT /dev/disk/by-uuid/095d3b5c-58dc-4893-a79a-98d56a84d75d

/dev/disk/by-uuid/095d3b5c-58dc-4893-a79a-98d56a84d75d:

Timing cached reads: 25058 MB in 1.99 seconds = 12577.91 MB/sec

HDIO_DRIVE_CMD(identify) failed: Inappropriate ioctl for device

Timing buffered disk reads: 530 MB in 3.00 seconds = 176.56 MB/sec

These are the new disks with 5400 rpm, let’s see what the old 7200 rpm disk shows here:

/dev/sdb:

Timing cached reads: 26052 MB in 1.99 seconds = 13077.22 MB/sec

Timing buffered disk reads: 446 MB in 3.01 seconds = 148.40 MB/sec

/dev/sdd:

Timing cached reads: 25602 MB in 1.99 seconds = 12851.19 MB/sec

Timing buffered disk reads: 420 MB in 3.01 seconds = 139.69 MB/sec

So even that these new disks are 5400 seems to be faster than the old ones !!

Also, I have mounted as read-only the problematic Raid-0 setup.

Rsync

I am now moving some data to measure time

- Folder-A

du -sh /mnt/data/Folder-A/

795G /mnt/data/Folder-A/

time rsync -P -rax /mnt/data/Folder-A/ Folder-A/

sending incremental file list

created directory Folder-A

./

...

real 163m27.531s

user 8m35.252s

sys 20m56.649s- Folder-B

du -sh /mnt/data/Folder-B/

464G /mnt/data/Folder-B/time rsync -P -rax /mnt/data/Folder-B/ Folder-B/

sending incremental file list

created directory Folder-B

./

...

real 102m1.808s

user 7m30.923s

sys 18m24.981s

Control and Monitor Utility for SMART Disks

Last but not least, some smart info with smartmontools

$sudo smartctl -t short /dev/sdc

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===

Sending command: "Execute SMART Short self-test routine immediately in off-line mode".

Drive command "Execute SMART Short self-test routine immediately in off-line mode" successful.

Testing has begun.

Please wait 2 minutes for test to complete.

Test will complete after Thu Dec 3 08:58:06 2020 EET

Use smartctl -X to abort test.

result :

$sudo smartctl -l selftest /dev/sdc

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 1 -details

$sudo smartctl -A /dev/sdc

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x002f 100 253 051 Pre-fail Always - 0

3 Spin_Up_Time 0x0027 100 253 021 Pre-fail Always - 0

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 1

5 Reallocated_Sector_Ct 0x0033 200 200 140 Pre-fail Always - 0

7 Seek_Error_Rate 0x002e 100 253 000 Old_age Always - 0

9 Power_On_Hours 0x0032 100 100 000 Old_age Always - 1

10 Spin_Retry_Count 0x0032 100 253 000 Old_age Always - 0

11 Calibration_Retry_Count 0x0032 100 253 000 Old_age Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 1

192 Power-Off_Retract_Count 0x0032 200 200 000 Old_age Always - 0

193 Load_Cycle_Count 0x0032 200 200 000 Old_age Always - 1

194 Temperature_Celsius 0x0022 119 119 000 Old_age Always - 31

196 Reallocated_Event_Count 0x0032 200 200 000 Old_age Always - 0

197 Current_Pending_Sector 0x0032 200 200 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 253 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

200 Multi_Zone_Error_Rate 0x0008 100 253 000 Old_age Offline - 0Second disk

$sudo smartctl -t short /dev/sde

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF OFFLINE IMMEDIATE AND SELF-TEST SECTION ===

Sending command: "Execute SMART Short self-test routine immediately in off-line mode".

Drive command "Execute SMART Short self-test routine immediately in off-line mode" successful.

Testing has begun.

Please wait 2 minutes for test to complete.

Test will complete after Thu Dec 3 09:00:56 2020 EET

Use smartctl -X to abort test.selftest results

$sudo smartctl -l selftest /dev/sde

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Self-test log structure revision number 1

Num Test_Description Status Remaining LifeTime(hours) LBA_of_first_error

# 1 Short offline Completed without error 00% 1 -

details

$sudo smartctl -A /dev/sde

smartctl 7.1 2019-12-30 r5022 [x86_64-linux-5.4.79-1-lts] (local build)

Copyright (C) 2002-19, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF READ SMART DATA SECTION ===

SMART Attributes Data Structure revision number: 16

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE

1 Raw_Read_Error_Rate 0x002f 100 253 051 Pre-fail Always - 0

3 Spin_Up_Time 0x0027 100 253 021 Pre-fail Always - 0

4 Start_Stop_Count 0x0032 100 100 000 Old_age Always - 1

5 Reallocated_Sector_Ct 0x0033 200 200 140 Pre-fail Always - 0

7 Seek_Error_Rate 0x002e 100 253 000 Old_age Always - 0

9 Power_On_Hours 0x0032 100 100 000 Old_age Always - 1

10 Spin_Retry_Count 0x0032 100 253 000 Old_age Always - 0

11 Calibration_Retry_Count 0x0032 100 253 000 Old_age Always - 0

12 Power_Cycle_Count 0x0032 100 100 000 Old_age Always - 1

192 Power-Off_Retract_Count 0x0032 200 200 000 Old_age Always - 0

193 Load_Cycle_Count 0x0032 200 200 000 Old_age Always - 1

194 Temperature_Celsius 0x0022 116 116 000 Old_age Always - 34

196 Reallocated_Event_Count 0x0032 200 200 000 Old_age Always - 0

197 Current_Pending_Sector 0x0032 200 200 000 Old_age Always - 0

198 Offline_Uncorrectable 0x0030 100 253 000 Old_age Offline - 0

199 UDMA_CRC_Error_Count 0x0032 200 200 000 Old_age Always - 0

200 Multi_Zone_Error_Rate 0x0008 100 253 000 Old_age Offline - 0that’s it !

-ebal

many thanks to erethon for his help & support on this article.

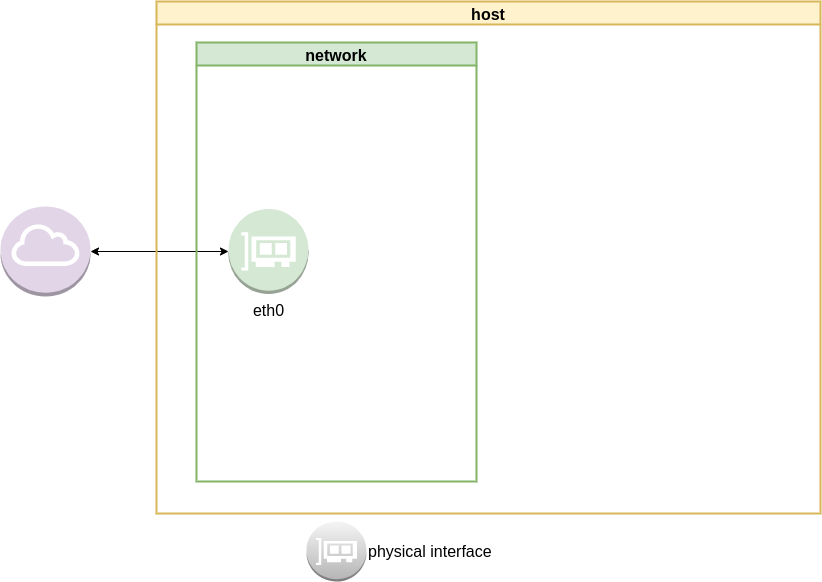

Working on your home lab, it is quiet often that you need to spawn containers or virtual machines to test or develop something. I was doing this kind of testing with public cloud providers with minimal VMs and for short time of periods to reduce any costs. In this article I will try to explain how to use libvirt -that means kvm- with terraform and provide a simple way to run this on your linux machine.

Be aware this will be a (long) technical article and some experience is needed with kvm/libvirt & terraform but I will try to keep it simple so you can follow the instructions.

Terraform

Install Terraform v0.13 either from your distro or directly from hashicopr’s site.

$ terraform version

Terraform v0.13.2

Libvirt

same thing for libvirt

$ libvirtd --version

libvirtd (libvirt) 6.5.0

$ sudo systemctl is-active libvirtd

active

verify that you have access to libvirt

$ virsh -c qemu:///system version

Compiled against library: libvirt 6.5.0

Using library: libvirt 6.5.0

Using API: QEMU 6.5.0

Running hypervisor: QEMU 5.1.0

Terraform Libvirt Provider

To access the libvirt daemon via terraform, we need the terraform-libvirt provider.

Terraform provider to provision infrastructure with Linux’s KVM using libvirt

The official repo is on GitHub - dmacvicar/terraform-provider-libvirt and you can download a precompiled version for your distro from the repo, or you can download a generic version from my gitlab repo

ebal / terraform-provider-libvirt · GitLab

These are my instructions

mkdir -pv ~/.local/share/terraform/plugins/registry.terraform.io/dmacvicar/libvirt/0.6.2/linux_amd64/

curl -sLo ~/.local/share/terraform/plugins/registry.terraform.io/dmacvicar/libvirt/0.6.2/linux_amd64/terraform-provider-libvirt https://gitlab.com/terraform-provider/terraform-provider-libvirt/-/jobs/artifacts/master/raw/terraform-provider-libvirt/terraform-provider-libvirt?job=run-build

chmod +x ~/.local/share/terraform/plugins/registry.terraform.io/dmacvicar/libvirt/0.6.2/linux_amd64/terraform-provider-libvirt

Terraform Init

Let’s create a new directory and test that everything is fine.

mkdir -pv tf_libvirt

cd !$

cat > Provider.tf <<EOF

terraform {

required_version = ">= 0.13"

required_providers {

libvirt = {

source = "dmacvicar/libvirt"

version = "0.6.2"

}

}

}

EOF

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding dmacvicar/libvirt versions matching "0.6.2"...

- Installing dmacvicar/libvirt v0.6.2...

- Installed dmacvicar/libvirt v0.6.2 (unauthenticated)

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see

any changes that are required for your infrastructure. All Terraform commands

should now work.

If you ever set or change modules or backend configuration for Terraform,

rerun this command to reinitialize your working directory. If you forget, other

commands will detect it and remind you to do so if necessary.

everything seems okay!

We can verify with tree or find

$ tree -a

.

├── Provider.tf

└── .terraform

└── plugins

├── registry.terraform.io

│ └── dmacvicar

│ └── libvirt

│ └── 0.6.2

│ └── linux_amd64 -> /home/ebal/.local/share/terraform/plugins/registry.terraform.io/dmacvicar/libvirt/0.6.2/linux_amd64

└── selections.json

7 directories, 2 files

Provider

but did we actually connect to libvirtd via terraform ?

Short answer: No.

We just told terraform to use this specific provider.

How to connect ?

We need to add the connection libvirt uri to the provider section:

provider "libvirt" {

uri = "qemu:///system"

}so our Provider.tf looks like this

terraform {

required_version = ">= 0.13"

required_providers {

libvirt = {

source = "dmacvicar/libvirt"

version = "0.6.2"

}

}

}

provider "libvirt" {

uri = "qemu:///system"

}

Libvirt URI

libvirt is a virtualization api/toolkit that supports multiple drivers and thus you can use libvirt against the below virtualization platforms

- LXC - Linux Containers

- OpenVZ

- QEMU

- VirtualBox

- VMware ESX

- VMware Workstation/Player

- Xen

- Microsoft Hyper-V

- Virtuozzo

- Bhyve - The BSD Hypervisor

Libvirt also supports multiple authentication mechanisms like ssh

virsh -c qemu+ssh://username@host1.example.org/systemso it is really important to properly define the libvirt URI in terraform!

In this article, I will limit it to a local libvirt daemon, but keep in mind you can use a remote libvirt daemon as well.

Volume

Next thing, we need a disk volume!

Volume.tf

resource "libvirt_volume" "ubuntu-2004-vol" {

name = "ubuntu-2004-vol"

pool = "default"

#source = "https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64-disk-kvm.img"

source = "ubuntu-20.04.img"

format = "qcow2"

}

I have already downloaded this image and verified its checksum, I will use this local image as the base image for my VM’s volume.

By running terraform plan we will see this output:

# libvirt_volume.ubuntu-2004-vol will be created

+ resource "libvirt_volume" "ubuntu-2004-vol" {

+ format = "qcow2"

+ id = (known after apply)

+ name = "ubuntu-2004-vol"

+ pool = "default"

+ size = (known after apply)

+ source = "ubuntu-20.04.img"

}What we expect is to use this source image and create a new disk volume (copy) and put it to the default disk storage libvirt pool.

Let’s try to figure out what is happening here:

terraform plan -out terraform.out

terraform apply terraform.out

terraform show# libvirt_volume.ubuntu-2004-vol:

resource "libvirt_volume" "ubuntu-2004-vol" {

format = "qcow2"

id = "/var/lib/libvirt/images/ubuntu-2004-vol"

name = "ubuntu-2004-vol"

pool = "default"

size = 2361393152

source = "ubuntu-20.04.img"

}

and

$ virsh -c qemu:///system vol-list default

Name Path

------------------------------------------------------------

ubuntu-2004-vol /var/lib/libvirt/images/ubuntu-2004-volVolume Size

BE Aware: by this declaration, the produced disk volume image will have the same size as the original source. In this case ~2G of disk.

We will show later in this article how to expand to something larger.

destroy volume

$ terraform destroy

libvirt_volume.ubuntu-2004-vol: Refreshing state... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

An execution plan has been generated and is shown below.

Resource actions are indicated with the following symbols:

- destroy

Terraform will perform the following actions:

# libvirt_volume.ubuntu-2004-vol will be destroyed

- resource "libvirt_volume" "ubuntu-2004-vol" {

- format = "qcow2" -> null

- id = "/var/lib/libvirt/images/ubuntu-2004-vol" -> null

- name = "ubuntu-2004-vol" -> null

- pool = "default" -> null

- size = 2361393152 -> null

- source = "ubuntu-20.04.img" -> null

}

Plan: 0 to add, 0 to change, 1 to destroy.

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

libvirt_volume.ubuntu-2004-vol: Destroying... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_volume.ubuntu-2004-vol: Destruction complete after 0s

Destroy complete! Resources: 1 destroyed.

verify

$ virsh -c qemu:///system vol-list default

Name Path

----------------------------------------------------------

reminder: always destroy!

Domain

Believe it or not, we are half way from our first VM!

We need to create a libvirt domain resource.

Domain.tf

cat > Domain.tf <<EOF

resource "libvirt_domain" "ubuntu-2004-vm" {

name = "ubuntu-2004-vm"

memory = "2048"

vcpu = 1

disk {

volume_id = libvirt_volume.ubuntu-2004-vol.id

}

}

EOFApply the new tf plan

terraform plan -out terraform.out

terraform apply terraform.out

$ terraform show

# libvirt_domain.ubuntu-2004-vm:

resource "libvirt_domain" "ubuntu-2004-vm" {

arch = "x86_64"

autostart = false

disk = [

{

block_device = ""

file = ""

scsi = false

url = ""

volume_id = "/var/lib/libvirt/images/ubuntu-2004-vol"

wwn = ""

},

]

emulator = "/usr/bin/qemu-system-x86_64"

fw_cfg_name = "opt/com.coreos/config"

id = "3a4a2b44-5ecd-433c-8645-9bccc831984f"

machine = "pc"

memory = 2048

name = "ubuntu-2004-vm"

qemu_agent = false

running = true

vcpu = 1

}

# libvirt_volume.ubuntu-2004-vol:

resource "libvirt_volume" "ubuntu-2004-vol" {

format = "qcow2"

id = "/var/lib/libvirt/images/ubuntu-2004-vol"

name = "ubuntu-2004-vol"

pool = "default"

size = 2361393152

source = "ubuntu-20.04.img"

}

Verify via virsh:

$ virsh -c qemu:///system list

Id Name State

--------------------------------

3 ubuntu-2004-vm running

Destroy them!

$ terraform destroy

Do you really want to destroy all resources?

Terraform will destroy all your managed infrastructure, as shown above.

There is no undo. Only 'yes' will be accepted to confirm.

Enter a value: yes

libvirt_domain.ubuntu-2004-vm: Destroying... [id=3a4a2b44-5ecd-433c-8645-9bccc831984f]

libvirt_domain.ubuntu-2004-vm: Destruction complete after 0s

libvirt_volume.ubuntu-2004-vol: Destroying... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_volume.ubuntu-2004-vol: Destruction complete after 0s

Destroy complete! Resources: 2 destroyed.

That’s it !

We have successfully created a new VM from a source image that runs on our libvirt environment.

But we can not connect/use or do anything with this instance. Not yet, we need to add a few more things. Like a network interface, a console output and a default cloud-init file to auto-configure the virtual machine.

Variables

Before continuing with the user-data (cloud-init), it is a good time to set up some terraform variables.

cat > Variables.tf <<EOF

variable "domain" {

description = "The domain/host name of the zone"

default = "ubuntu2004"

}

EOF

We are going to use this variable within the user-date yaml file.

Cloud-init

The best way to configure a newly created virtual machine, is via cloud-init and the ability of injecting a user-data.yml file into the virtual machine via terraform-libvirt.

user-data

#cloud-config

#disable_root: true

disable_root: false

chpasswd:

list: |

root:ping

expire: False

# Set TimeZone

timezone: Europe/Athens

hostname: "${hostname}"

# PostInstall

runcmd:

# Remove cloud-init

- apt-get -qqy autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

cloud init disk

Terraform will create a new iso by reading the above template file and generate a proper user-data.yaml file. To use this cloud init iso, we need to configure it as a libvirt cloud-init resource.

Cloudinit.tf

data "template_file" "user_data" {

template = file("user-data.yml")

vars = {

hostname = var.domain

}

}

resource "libvirt_cloudinit_disk" "cloud-init" {

name = "cloud-init.iso"

user_data = data.template_file.user_data.rendered

}

and we need to modify our Domain.tf accordingly

cloudinit = libvirt_cloudinit_disk.cloud-init.idTerraform will create and upload this iso disk image into the default libvirt storage pool. And attach it to the virtual machine in the boot process.

At this moment the tf_libvirt directory should look like this:

$ ls -1

Cloudinit.tf

Domain.tf

Provider.tf

ubuntu-20.04.img

user-data.yml

Variables.tf

Volume.tf

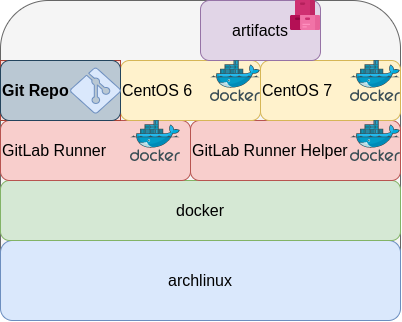

To give you an idea, the abstract design is this:

apply

terraform plan -out terraform.out

terraform apply terraform.out

$ terraform show

# data.template_file.user_data:

data "template_file" "user_data" {

id = "cc82a7db4c6498aee21a883729fc4be7b84059d3dec69b92a210e046c67a9a00"

rendered = <<~EOT

#cloud-config

#disable_root: true

disable_root: false

chpasswd:

list: |

root:ping

expire: False

# Set TimeZone

timezone: Europe/Athens

hostname: "ubuntu2004"

# PostInstall

runcmd:

# Remove cloud-init

- apt-get -qqy autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

EOT

template = <<~EOT

#cloud-config

#disable_root: true

disable_root: false

chpasswd:

list: |

root:ping

expire: False

# Set TimeZone

timezone: Europe/Athens

hostname: "${hostname}"

# PostInstall

runcmd:

# Remove cloud-init

- apt-get -qqy autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

EOT

vars = {

"hostname" = "ubuntu2004"

}

}

# libvirt_cloudinit_disk.cloud-init:

resource "libvirt_cloudinit_disk" "cloud-init" {

id = "/var/lib/libvirt/images/cloud-init.iso;5f5cdc31-1d38-39cb-cc72-971e474ca539"

name = "cloud-init.iso"

pool = "default"

user_data = <<~EOT

#cloud-config

#disable_root: true

disable_root: false

chpasswd:

list: |

root:ping

expire: False

# Set TimeZone

timezone: Europe/Athens

hostname: "ubuntu2004"

# PostInstall

runcmd:

# Remove cloud-init

- apt-get -qqy autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

EOT

}

# libvirt_domain.ubuntu-2004-vm:

resource "libvirt_domain" "ubuntu-2004-vm" {

arch = "x86_64"

autostart = false

cloudinit = "/var/lib/libvirt/images/cloud-init.iso;5f5ce077-9508-3b8c-273d-02d44443b79c"

disk = [

{

block_device = ""

file = ""

scsi = false

url = ""

volume_id = "/var/lib/libvirt/images/ubuntu-2004-vol"

wwn = ""

},

]

emulator = "/usr/bin/qemu-system-x86_64"

fw_cfg_name = "opt/com.coreos/config"

id = "3ade5c95-30d4-496b-9bcf-a12d63993cfa"

machine = "pc"

memory = 2048

name = "ubuntu-2004-vm"

qemu_agent = false

running = true

vcpu = 1

}

# libvirt_volume.ubuntu-2004-vol:

resource "libvirt_volume" "ubuntu-2004-vol" {

format = "qcow2"

id = "/var/lib/libvirt/images/ubuntu-2004-vol"

name = "ubuntu-2004-vol"

pool = "default"

size = 2361393152

source = "ubuntu-20.04.img"

}

Lots of output , but let me explain it really quick:

generate a user-data file from template, template is populated with variables, create an cloud-init iso, create a volume disk from source, create a virtual machine with this new volume disk and boot it with this cloud-init iso.

Pretty much, that’s it!!!

$ virsh -c qemu:///system vol-list --details default

Name Path Type Capacity Allocation

---------------------------------------------------------------------------------------------

cloud-init.iso /var/lib/libvirt/images/cloud-init.iso file 364.00 KiB 364.00 KiB

ubuntu-2004-vol /var/lib/libvirt/images/ubuntu-2004-vol file 2.20 GiB 537.94 MiB

$ virsh -c qemu:///system list

Id Name State

--------------------------------

1 ubuntu-2004-vm running

destroy

$ terraform destroy -auto-approve

data.template_file.user_data: Refreshing state... [id=cc82a7db4c6498aee21a883729fc4be7b84059d3dec69b92a210e046c67a9a00]

libvirt_volume.ubuntu-2004-vol: Refreshing state... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_cloudinit_disk.cloud-init: Refreshing state... [id=/var/lib/libvirt/images/cloud-init.iso;5f5cdc31-1d38-39cb-cc72-971e474ca539]

libvirt_domain.ubuntu-2004-vm: Refreshing state... [id=3ade5c95-30d4-496b-9bcf-a12d63993cfa]

libvirt_cloudinit_disk.cloud-init: Destroying... [id=/var/lib/libvirt/images/cloud-init.iso;5f5cdc31-1d38-39cb-cc72-971e474ca539]

libvirt_domain.ubuntu-2004-vm: Destroying... [id=3ade5c95-30d4-496b-9bcf-a12d63993cfa]

libvirt_cloudinit_disk.cloud-init: Destruction complete after 0s

libvirt_domain.ubuntu-2004-vm: Destruction complete after 0s

libvirt_volume.ubuntu-2004-vol: Destroying... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_volume.ubuntu-2004-vol: Destruction complete after 0s

Destroy complete! Resources: 3 destroyed.

Most important detail is:

Resources: 3 destroyed.

- cloud-init.iso

- ubuntu-2004-vol

- ubuntu-2004-vm

Console

but there are a few things still missing.

To add a console for starters so we can connect into this virtual machine!

To do that, we need to re-edit Domain.tf and add a console output:

console {

target_type = "serial"

type = "pty"

target_port = "0"

}

console {

target_type = "virtio"

type = "pty"

target_port = "1"

}

the full file should look like:

resource "libvirt_domain" "ubuntu-2004-vm" {

name = "ubuntu-2004-vm"

memory = "2048"

vcpu = 1

cloudinit = libvirt_cloudinit_disk.cloud-init.id

disk {

volume_id = libvirt_volume.ubuntu-2004-vol.id

}

console {

target_type = "serial"

type = "pty"

target_port = "0"

}

console {

target_type = "virtio"

type = "pty"

target_port = "1"

}

}

Create again the VM with

terraform plan -out terraform.out

terraform apply terraform.out

And test the console:

$ virsh -c qemu:///system console ubuntu-2004-vm

Connected to domain ubuntu-2004-vm

Escape character is ^] (Ctrl + ])

wow!

We have actually logged-in to this VM using the libvirt console!

Virtual Machine

some interesting details

root@ubuntu2004:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/root 2.0G 916M 1.1G 46% /

devtmpfs 998M 0 998M 0% /dev

tmpfs 999M 0 999M 0% /dev/shm

tmpfs 200M 392K 200M 1% /run

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 999M 0 999M 0% /sys/fs/cgroup

/dev/vda15 105M 3.9M 101M 4% /boot/efi

tmpfs 200M 0 200M 0% /run/user/0

root@ubuntu2004:~# free -hm

total used free shared buff/cache available

Mem: 2.0Gi 73Mi 1.7Gi 0.0Ki 140Mi 1.8Gi

Swap: 0B 0B 0B

root@ubuntu2004:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

3: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/sit 0.0.0.0 brd 0.0.0.0

Do not forget to destroy

$ terraform destroy -auto-approve

data.template_file.user_data: Refreshing state... [id=cc82a7db4c6498aee21a883729fc4be7b84059d3dec69b92a210e046c67a9a00]

libvirt_volume.ubuntu-2004-vol: Refreshing state... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_cloudinit_disk.cloud-init: Refreshing state... [id=/var/lib/libvirt/images/cloud-init.iso;5f5ce077-9508-3b8c-273d-02d44443b79c]

libvirt_domain.ubuntu-2004-vm: Refreshing state... [id=69f75b08-1e06-409d-9fd6-f45d82260b51]

libvirt_domain.ubuntu-2004-vm: Destroying... [id=69f75b08-1e06-409d-9fd6-f45d82260b51]

libvirt_domain.ubuntu-2004-vm: Destruction complete after 0s

libvirt_cloudinit_disk.cloud-init: Destroying... [id=/var/lib/libvirt/images/cloud-init.iso;5f5ce077-9508-3b8c-273d-02d44443b79c]

libvirt_volume.ubuntu-2004-vol: Destroying... [id=/var/lib/libvirt/images/ubuntu-2004-vol]

libvirt_cloudinit_disk.cloud-init: Destruction complete after 0s

libvirt_volume.ubuntu-2004-vol: Destruction complete after 0s

Destroy complete! Resources: 3 destroyed.

extend the volume disk

As mentioned above, the volume’s disk size is exactly as the origin source image.

In our case it’s 2G.

What we need to do, is to use the source image as a base for a new volume disk. In our new volume disk, we can declare the size we need.

I would like to make this a user variable: Variables.tf

variable "vol_size" {

description = "The mac & iP address for this VM"

# 10G

default = 10 * 1024 * 1024 * 1024

}

Arithmetic in terraform!!

So the Volume.tf should be:

resource "libvirt_volume" "ubuntu-2004-base" {

name = "ubuntu-2004-base"

pool = "default"

#source = "https://cloud-images.ubuntu.com/focal/current/focal-server-cloudimg-amd64-disk-kvm.img"

source = "ubuntu-20.04.img"

format = "qcow2"

}

resource "libvirt_volume" "ubuntu-2004-vol" {

name = "ubuntu-2004-vol"

pool = "default"

base_volume_id = libvirt_volume.ubuntu-2004-base.id

size = var.vol_size

}

base image –> volume image

test it

terraform plan -out terraform.out

terraform apply terraform.out

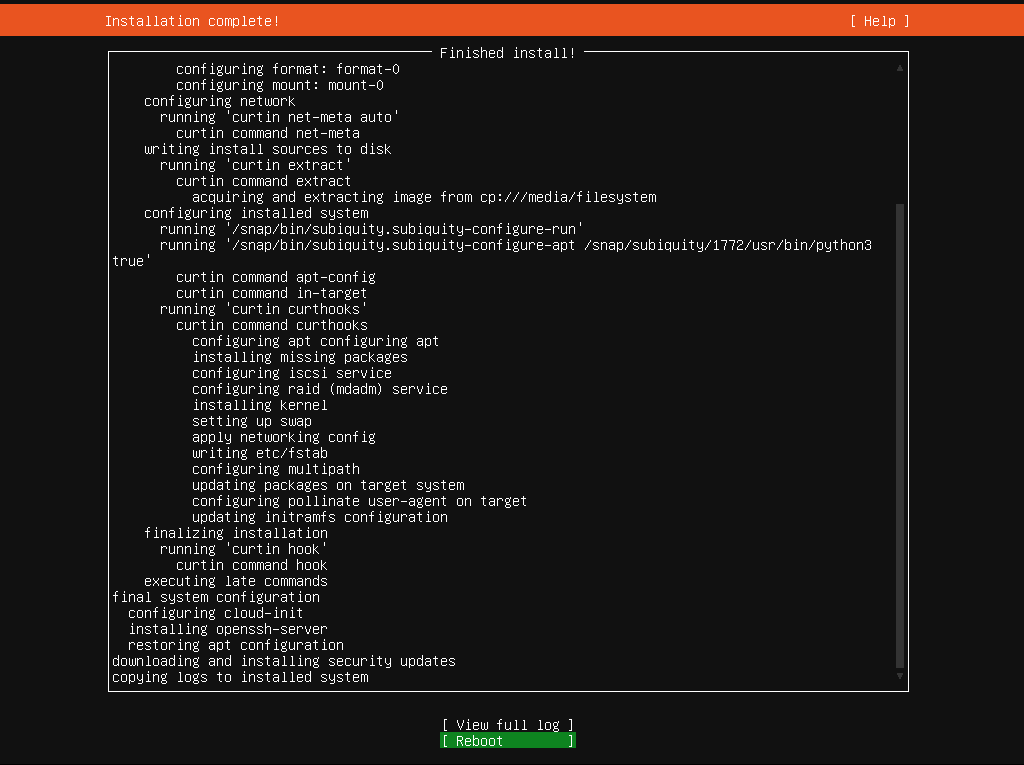

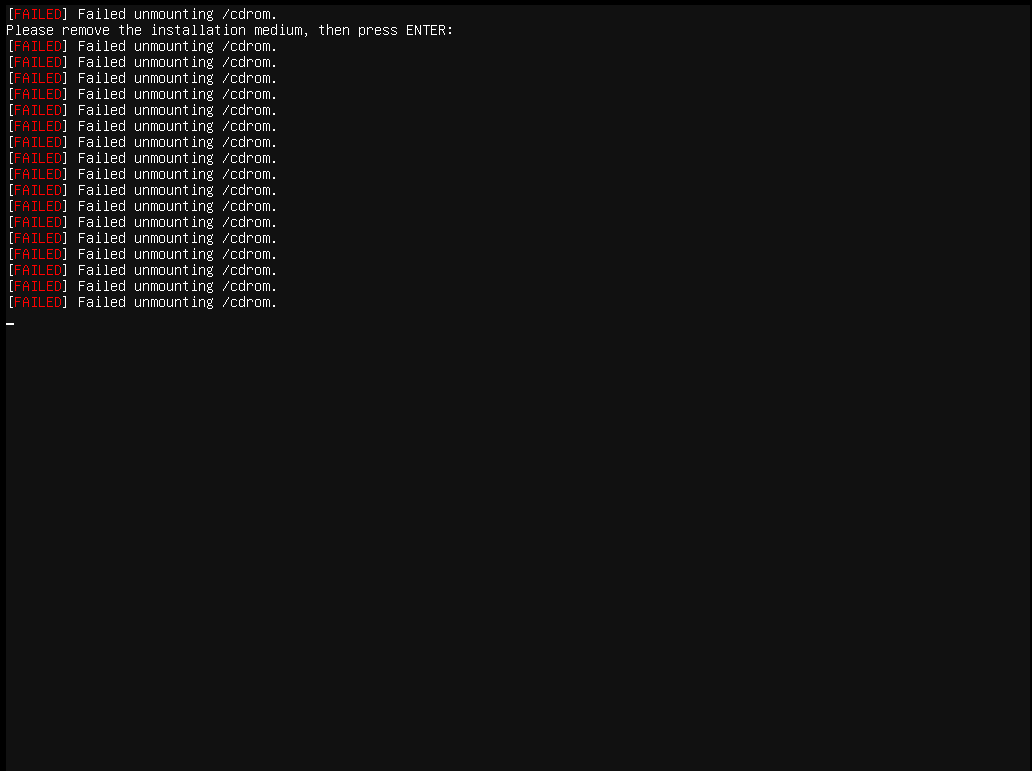

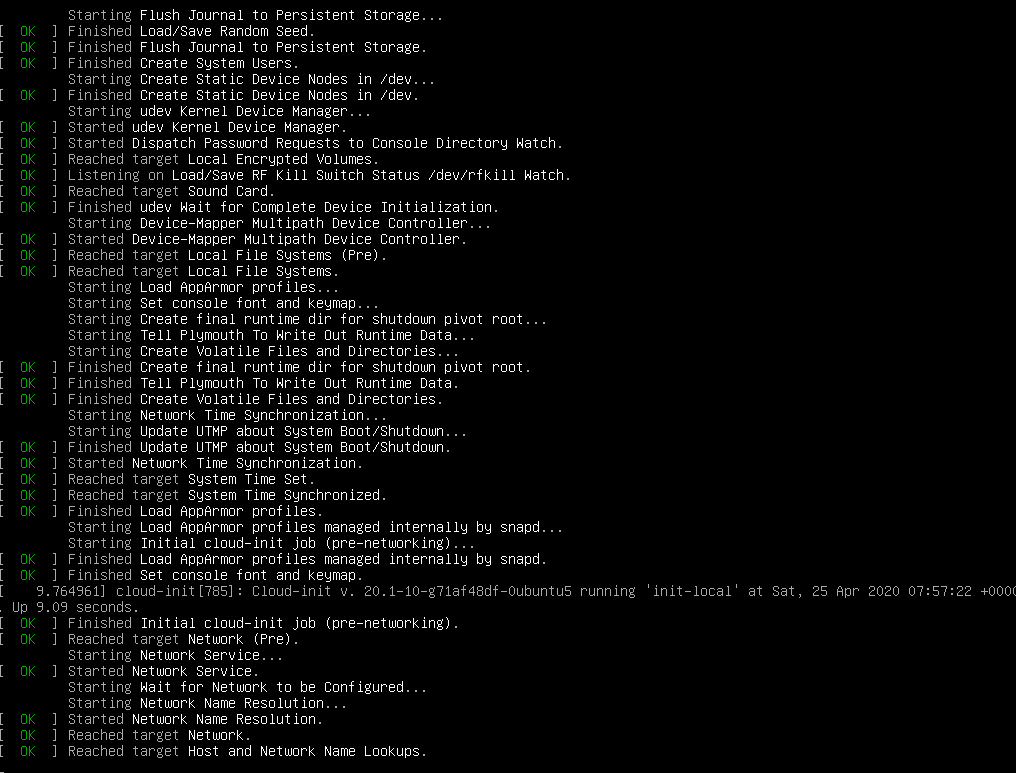

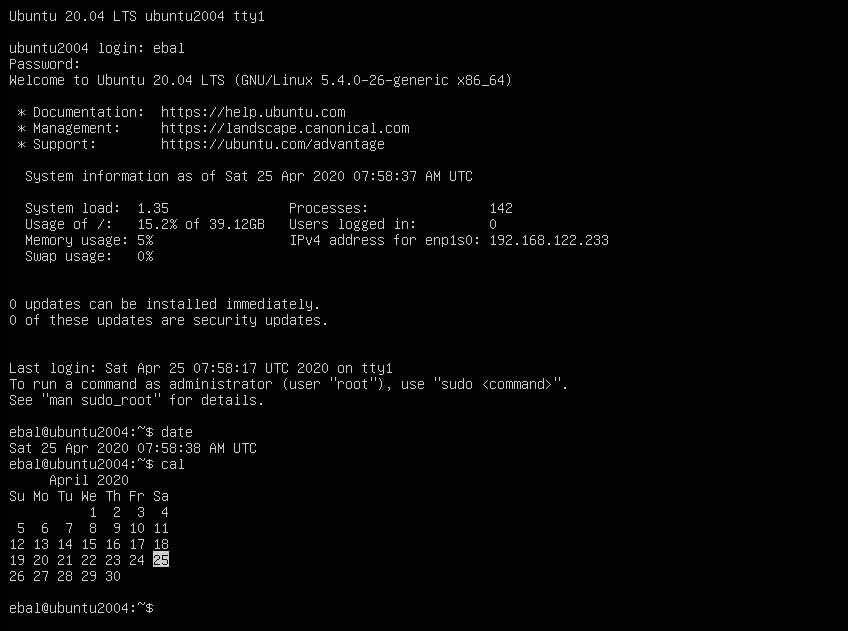

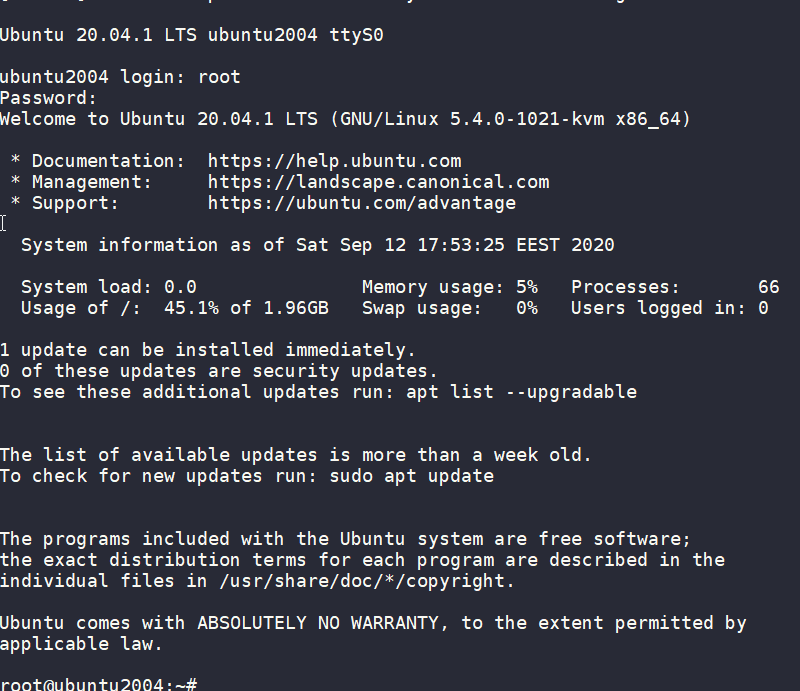

$ virsh -c qemu:///system console ubuntu-2004-vm

Connected to domain ubuntu-2004-vm

Escape character is ^] (Ctrl + ])

ubuntu2004 login: root

Password:

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-1021-kvm x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 12 18:27:46 EEST 2020

System load: 0.29 Memory usage: 6% Processes: 66

Usage of /: 9.3% of 9.52GB Swap usage: 0% Users logged in: 0

0 updates can be installed immediately.

0 of these updates are security updates.

Failed to connect to https://changelogs.ubuntu.com/meta-release-lts. Check your Internet connection or proxy settings

Last login: Sat Sep 12 18:26:37 EEST 2020 on ttyS0

root@ubuntu2004:~# df -h /

Filesystem Size Used Avail Use% Mounted on

/dev/root 9.6G 912M 8.7G 10% /

root@ubuntu2004:~#

10G !

destroy

terraform destroy -auto-approveSwap

I would like to have a swap partition and I will use cloud init to create a swap partition.

modify user-data.yml

# Create swap partition

swap:

filename: /swap.img

size: "auto"

maxsize: 2G

test it

terraform plan -out terraform.out && terraform apply terraform.out$ virsh -c qemu:///system console ubuntu-2004-vm

Connected to domain ubuntu-2004-vm

Escape character is ^] (Ctrl + ])

root@ubuntu2004:~# free -hm

total used free shared buff/cache available

Mem: 2.0Gi 86Mi 1.7Gi 0.0Ki 188Mi 1.8Gi

Swap: 2.0Gi 0B 2.0Gi

root@ubuntu2004:~#

success !!

terraform destroy -auto-approveNetwork

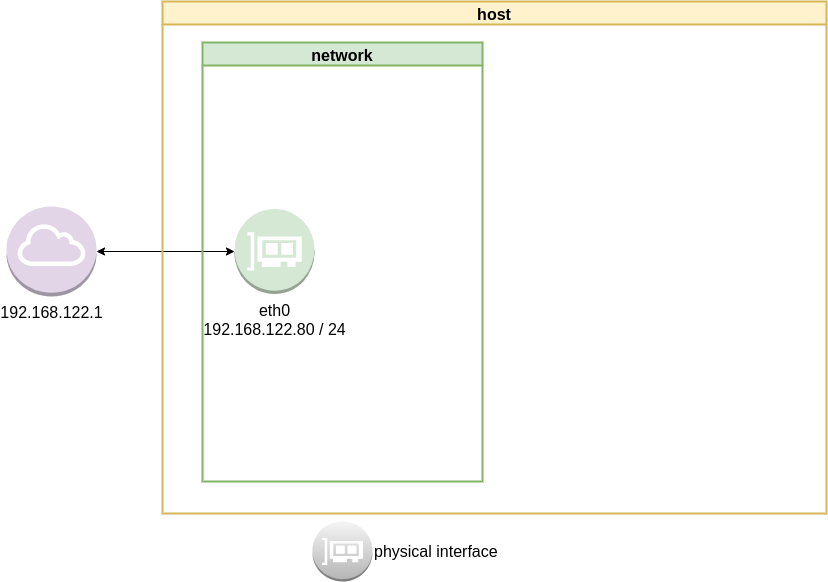

How about internet? network?

Yes, what about it ?

I guess you need to connect to the internets, okay then.

The easiest way is to add this your Domain.tf

network_interface {

network_name = "default"

}

This will use the default network libvirt resource

$ virsh -c qemu:///system net-list

Name State Autostart Persistent

----------------------------------------------------

default active yes yes

if you prefer to directly expose your VM to your local network (be very careful) then replace the above with a macvtap interface. If your ISP router provides dhcp, then your VM will take a random IP from your router.

network_interface {

macvtap = "eth0"

}

test it

terraform plan -out terraform.out && terraform apply terraform.out$ virsh -c qemu:///system console ubuntu-2004-vm

Connected to domain ubuntu-2004-vm

Escape character is ^] (Ctrl + ])

root@ubuntu2004:~#

root@ubuntu2004:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:36:66:96 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.228/24 brd 192.168.122.255 scope global dynamic ens3

valid_lft 3544sec preferred_lft 3544sec

inet6 fe80::5054:ff:fe36:6696/64 scope link

valid_lft forever preferred_lft forever

3: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN group default qlen 1000

link/sit 0.0.0.0 brd 0.0.0.0

root@ubuntu2004:~# ping -c 5 google.com

PING google.com (172.217.23.142) 56(84) bytes of data.

64 bytes from fra16s18-in-f142.1e100.net (172.217.23.142): icmp_seq=1 ttl=115 time=43.4 ms

64 bytes from fra16s18-in-f142.1e100.net (172.217.23.142): icmp_seq=2 ttl=115 time=43.9 ms

64 bytes from fra16s18-in-f142.1e100.net (172.217.23.142): icmp_seq=3 ttl=115 time=43.0 ms

64 bytes from fra16s18-in-f142.1e100.net (172.217.23.142): icmp_seq=4 ttl=115 time=43.1 ms

64 bytes from fra16s18-in-f142.1e100.net (172.217.23.142): icmp_seq=5 ttl=115 time=43.4 ms

--- google.com ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4005ms

rtt min/avg/max/mdev = 42.977/43.346/43.857/0.311 ms

root@ubuntu2004:~#

destroy

$ terraform destroy -auto-approve

Destroy complete! Resources: 4 destroyed.

SSH

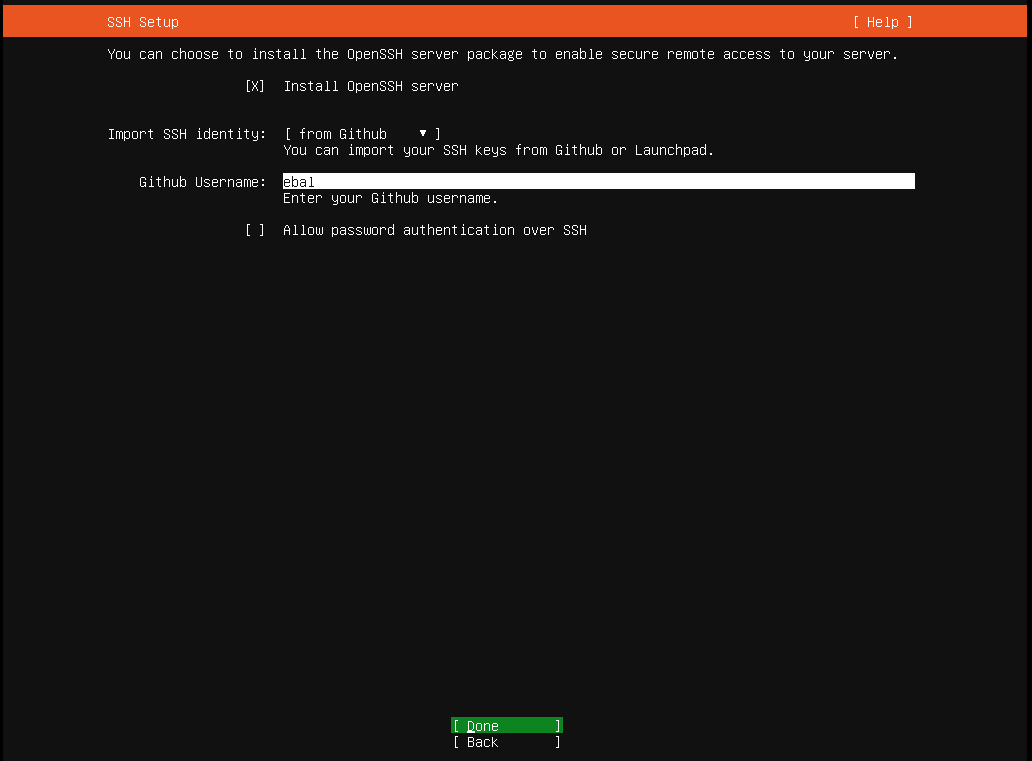

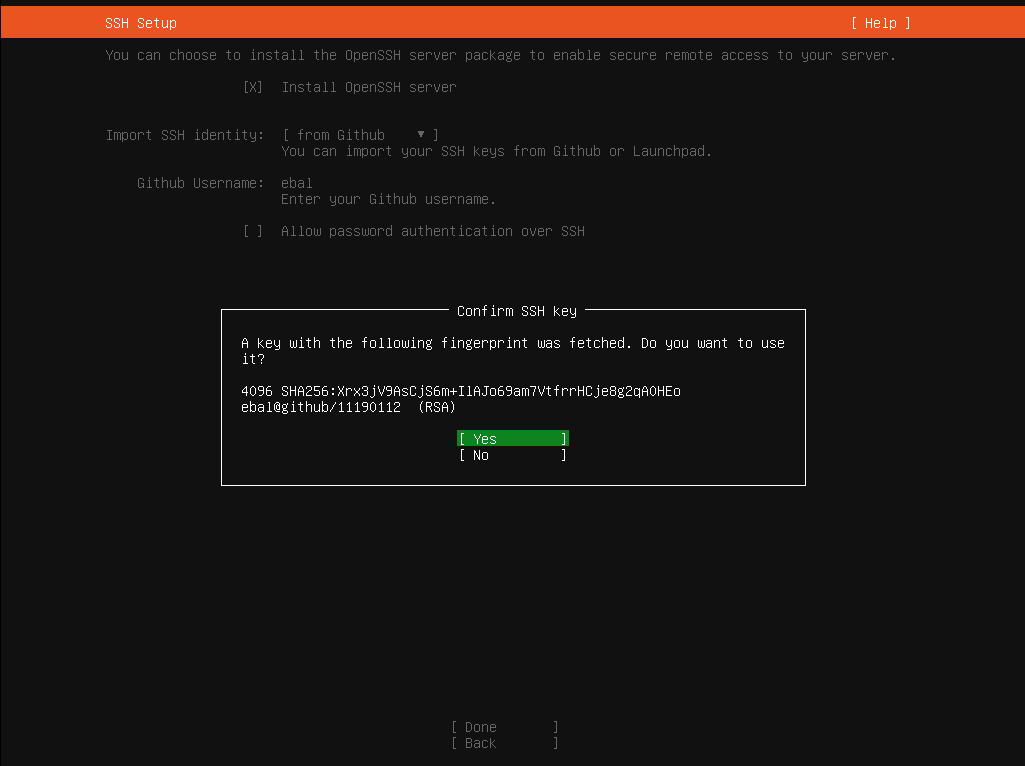

Okay, now that we have network it is possible to setup ssh to our virtual machine and also auto create a user. I would like cloud-init to get my public key from github and setup my user.

disable_root: true

ssh_pwauth: no

users:

- name: ebal

ssh_import_id:

- gh:ebal

shell: /bin/bash

sudo: ALL=(ALL) NOPASSWD:ALL

write_files:

- path: /etc/ssh/sshd_config

content: |

AcceptEnv LANG LC_*

AllowUsers ebal

ChallengeResponseAuthentication no

Compression NO

MaxSessions 3

PasswordAuthentication no

PermitRootLogin no

Port "${sshdport}"

PrintMotd no

Subsystem sftp /usr/lib/openssh/sftp-server

UseDNS no

UsePAM yes

X11Forwarding no

Notice, I have added a new variable called sshdport

Variables.tf

variable "ssh_port" {

description = "The sshd port of the VM"

default = 12345

}

and do not forget to update your cloud-init tf

Cloudinit.tf

data "template_file" "user_data" {

template = file("user-data.yml")

vars = {

hostname = var.domain

sshdport = var.ssh_port

}

}

resource "libvirt_cloudinit_disk" "cloud-init" {

name = "cloud-init.iso"

user_data = data.template_file.user_data.rendered

}

Update VM

I would also like to update & install specific packages to this virtual machine:

# Install packages

packages:

- figlet

- mlocate

- python3-apt

- bash-completion

- ncdu

# Update/Upgrade & Reboot if necessary

package_update: true

package_upgrade: true

package_reboot_if_required: true

# PostInstall

runcmd:

- figlet ${hostname} > /etc/motd

- updatedb

# Firewall

- ufw allow "${sshdport}"/tcp && ufw enable

# Remove cloud-init

- apt-get -y autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

Yes, I prefer to uninstall cloud-init at the end.

user-date.yaml

the entire user-date.yaml looks like this:

#cloud-config

disable_root: true

ssh_pwauth: no

users:

- name: ebal

ssh_import_id:

- gh:ebal

shell: /bin/bash

sudo: ALL=(ALL) NOPASSWD:ALL

write_files:

- path: /etc/ssh/sshd_config

content: |

AcceptEnv LANG LC_*

AllowUsers ebal

ChallengeResponseAuthentication no

Compression NO

MaxSessions 3

PasswordAuthentication no

PermitRootLogin no

Port "${sshdport}"

PrintMotd no

Subsystem sftp /usr/lib/openssh/sftp-server

UseDNS no

UsePAM yes

X11Forwarding no

# Set TimeZone

timezone: Europe/Athens

hostname: "${hostname}"

# Create swap partition

swap:

filename: /swap.img

size: "auto"

maxsize: 2G

# Install packages

packages:

- figlet

- mlocate

- python3-apt

- bash-completion

- ncdu

# Update/Upgrade & Reboot if necessary

package_update: true

package_upgrade: true

package_reboot_if_required: true

# PostInstall

runcmd:

- figlet ${hostname} > /etc/motd

- updatedb

# Firewall

- ufw allow "${sshdport}"/tcp && ufw enable

# Remove cloud-init

- apt-get -y autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

Output

We need to know the IP to login so create a new terraform file to get the IP

Output.tf

output "IP" {

value = libvirt_domain.ubuntu-2004-vm.network_interface.0.addresses

}

but that means that we need to wait for the dhcp lease. Modify Domain.tf to tell terraform to wait.

network_interface {

network_name = "default"

wait_for_lease = true

}

Plan & Apply

$ terraform plan -out terraform.out && terraform apply terraform.out

Outputs:

IP = [

"192.168.122.79",

]

Verify

$ ssh 192.168.122.79 -p 12345 uptime

19:33:46 up 2 min, 0 users, load average: 0.95, 0.37, 0.14

$ ssh 192.168.122.79 -p 12345

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-1023-kvm x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information as of Sat Sep 12 19:34:45 EEST 2020

System load: 0.31 Processes: 72

Usage of /: 33.1% of 9.52GB Users logged in: 0

Memory usage: 7% IPv4 address for ens3: 192.168.122.79

Swap usage: 0%

0 updates can be installed immediately.

0 of these updates are security updates.

_ _ ____ ___ ___ _ _

_ _| |__ _ _ _ __ | |_ _ _|___ / _ / _ | || |

| | | | '_ | | | | '_ | __| | | | __) | | | | | | | || |_

| |_| | |_) | |_| | | | | |_| |_| |/ __/| |_| | |_| |__ _|

__,_|_.__/ __,_|_| |_|__|__,_|_____|___/ ___/ |_|

Last login: Sat Sep 12 19:34:37 2020 from 192.168.122.1

ebal@ubuntu2004:~$

ebal@ubuntu2004:~$ df -h /

Filesystem Size Used Avail Use% Mounted on

/dev/root 9.6G 3.2G 6.4G 34% /

ebal@ubuntu2004:~$ free -hm

total used free shared buff/cache available

Mem: 2.0Gi 91Mi 1.7Gi 0.0Ki 197Mi 1.8Gi

Swap: 2.0Gi 0B 2.0Gi

ebal@ubuntu2004:~$ ping -c 5 libreops.cc

PING libreops.cc (185.199.108.153) 56(84) bytes of data.

64 bytes from 185.199.108.153 (185.199.108.153): icmp_seq=1 ttl=55 time=48.4 ms

64 bytes from 185.199.108.153 (185.199.108.153): icmp_seq=2 ttl=55 time=48.7 ms

64 bytes from 185.199.108.153 (185.199.108.153): icmp_seq=3 ttl=55 time=48.5 ms

64 bytes from 185.199.108.153 (185.199.108.153): icmp_seq=4 ttl=55 time=48.3 ms

64 bytes from 185.199.108.153 (185.199.108.153): icmp_seq=5 ttl=55 time=52.8 ms

--- libreops.cc ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4006ms

rtt min/avg/max/mdev = 48.266/49.319/52.794/1.743 ms

what !!!!

awesome

destroy

terraform destroy -auto-approveCustom Network

One last thing I would like to discuss is how to create a new network and provide a specific IP to your VM. This will separate your VMs/lab and it is cheap & easy to setup a new libvirt network.

Network.tf

resource "libvirt_network" "tf_net" {

name = "tf_net"

domain = "libvirt.local"

addresses = ["192.168.123.0/24"]

dhcp {

enabled = true

}

dns {

enabled = true

}

}

and replace network_interface in Domains.tf

network_interface {

network_id = libvirt_network.tf_net.id

network_name = "tf_net"

addresses = ["192.168.123.${var.IP_addr}"]

mac = "52:54:00:b2:2f:${var.IP_addr}"

wait_for_lease = true

}Closely look, there is a new terraform variable

Variables.tf

variable "IP_addr" {

description = "The mac & iP address for this VM"

default = 33

}$ terraform plan -out terraform.out && terraform apply terraform.out

Outputs:

IP = [

"192.168.123.33",

]

$ ssh 192.168.123.33 -p 12345

Welcome to Ubuntu 20.04.1 LTS (GNU/Linux 5.4.0-1021-kvm x86_64)

* Documentation: https://help.ubuntu.com

* Management: https://landscape.canonical.com

* Support: https://ubuntu.com/advantage

System information disabled due to load higher than 1.0

12 updates can be installed immediately.

8 of these updates are security updates.

To see these additional updates run: apt list --upgradable

Last login: Sat Sep 12 19:56:33 2020 from 192.168.123.1

ebal@ubuntu2004:~$ ip addr show ens3

2: ens3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 52:54:00:b2:2f:33 brd ff:ff:ff:ff:ff:ff

inet 192.168.123.33/24 brd 192.168.123.255 scope global dynamic ens3

valid_lft 3491sec preferred_lft 3491sec

inet6 fe80::5054:ff:feb2:2f33/64 scope link

valid_lft forever preferred_lft forever

ebal@ubuntu2004:~$

Terraform files

you can find every terraform example in my github repo

tf/0.13/libvirt/0.6.2/ubuntu/20.04 at master · ebal/tf · GitHub

That’s it!

If you like this article, consider following me on twitter ebalaskas.

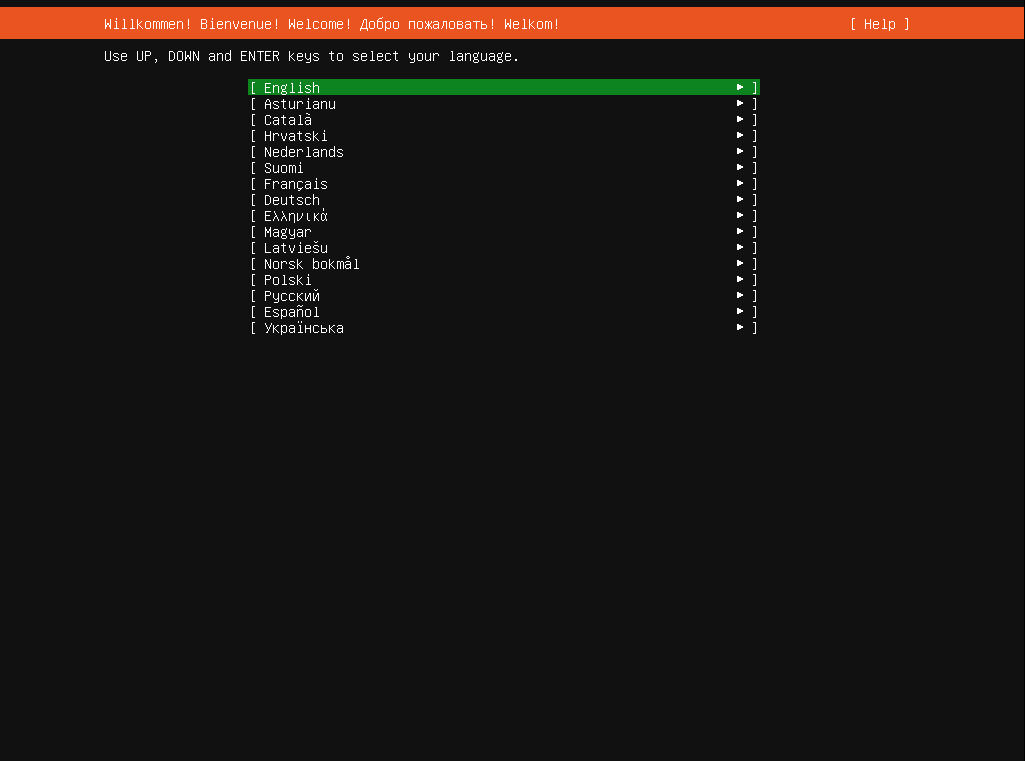

[Original Published at Linkedin on October 28, 2018]

The curse of knowledge is a cognitive bias that occurs when an individual, communicating with other individuals, unknowingly assumes that the others have the background to understand.

Let’s talk about documentation

This is the one big elephant in every team’s room.

TLDR; Increment: Documentation

Documentation empowers users and technical teams to function more effectively, and can promote approachability, accessibility, efficiency, innovation, and more stable development.

Bad technical guides can cause frustration, confusion, and distrust in your software, support channels, and even your brand—and they can hinder progress and productivity internally

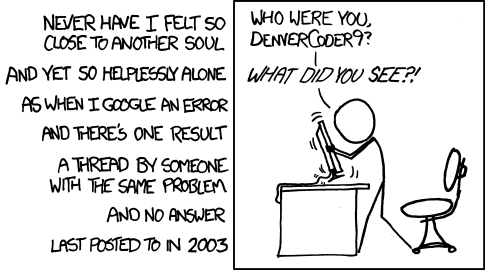

so to avoid situations like these:

or/and

documentation must exist!

Myths

- Self-documenting code

- No time to write documentation

- There are code examples, what else you need?

- There is a wiki page (or 300.000 pages).

- I’m not a professional writer

- No one reads the manual

Problems

- Maintaining the documentation (up2date)

- Incomplete or Confusing documentation

- Documentation is platform/version oriented

- Engineers who may not be fluent in English (or dont speak your language)

- Too long

- Too short

- Documentation structure

Types of documentation

- Technical Manual (system)

- Tutorial (mini tutorials)

- HowTo (mini howto)

- Library/API documentation (reference)

- Customer Documentation (end user)

- Operations manual

- User manual (support)

- Team documentation

- Project/Software documentation

- Notes

- FAQ

Why Documentation Is Important

Communication is a key to success. Documentation is part of the communication process. We either try to communicate or collaborate with our customers or even within our own team. We use our documentation to inform customers of new feautures and how to use them, to train our internal team (colleagues), collaborate with them, reach-out, help-out, connect, communicate our work with others.

When writing code, documentation should be the “One-Truth” instead of the code repository. I love working with projects that they will not deploy a new feature before updating the documentation first. For example I read the ‘Release Notes for Red Hat’ and the ChangeLog instead of reading myriads of code repositories.

Know Your Audience

Try to answer these questions:

- Who is reading this documentation ?

- Is it for internal or external users/customers ?

- Do they have a dev background ?

- Arey they non-technical people ?

Use personas to create diferrent material. Try to remember this one gold rule:

Audidence should get value from documentation (learning or something).

Guidelines

Here are some guidelines:

- Tell a story

- Use a narative voice

- Try to solve a problem

- Simplify - KISS philosophy

- Focus on approachability and usability

Even on a technical document try to:

- Write documentation agnostic - Independent Platform

- Reproducibility

- Not writing in acronyms and technical jargon (explain)

- Step Approach

- Towards goal achievement

- Routines

UX

A picture is worth a thousand words

so remember to:

- visual representation of the information architecture

- use code examples

- screencasts

- CLI –help output

- usage

- clear error messages

Customers and Users do want to write nothing.

Reducing user input, your project will be more fault tolerant.

Instead of providing a procedure for a deploy pipeline, give them a deploy bot, a next-next-install Gui/Web User-Interface and focus your documentation around that.

Content

So what to include in the documentation.

- How technical should be or not ?

- Use cases ?

- General-Purpose ?

- Article size (small pages are more manageable set to maintain).

- Minimum Viable Content Vs Too much detail

- Help them to understand

imagine your documentation as microservices instead of a huge monolith project.

Usally a well defined structure, looks like this:

- Table of Contents (toc)

- Introduction

- Short Description

- Sections / Modules / Chapters

- Conclusion / Summary

- Glossary

- Index

- References

Tools

I prefer wiki pages instead of a word-document, because of the below features:

- Version

- History

- Portability

- Convertibility

- Accountability

btw if you are using Confluence, there is a Markdown plugin.

Measurements & Feedback

To understand if your documentation is good or not, you need feedback. But first there is an evaluation process of Review. It is the same thing as writing code, you cant review your own code! The first feedback should come within your team.

Use analytics to measure if people reads your documentation, from ‘Hits per page’ to more advance analytics as Matomo (formerly Piwik). Profiling helps you understand your audience. What they like in documentation?

Customer satisfaction (CSat) are important in documentation metrics.

- Was this page helpful? Yes/No

- Allowing customers to submit comments.

- Upvote/Downvote / Like

- or even let them to contribute in your documentation

make it easy for people to share their feedbak and find a way to include their comments in it.

FAQ

Frequently Asked Questions should answering questions in the style of:

- What would customers ask ?

- What if

- How to

FAQ or QA should be really straight forward, short and simple as it can be. You are writing a FAQ because you are here to help customers to learn how to use this specific feature not the entire software. Use links for more detail info, that direct them to your main documentation.

Conclusion

Sharing knowledge & shaping the culture of your team/end users. Your documentation should reflect your customers needs. Everything you do in your business is to satisfy your customers. Documentation is one way to communicate this.

So here are some final points on documentation:

- User Oriented

- Readability

- Comprehensive

- Keep it up to date

[this is a technical blog post, but easy to follow]

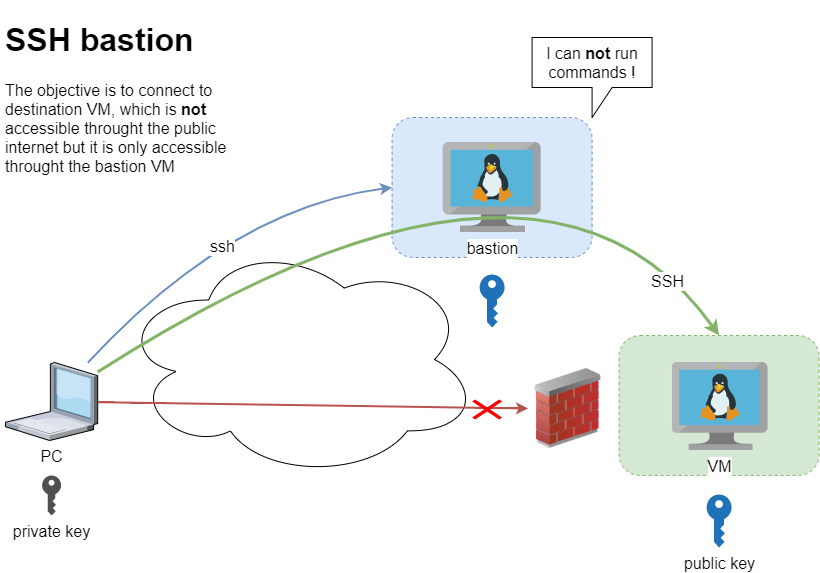

recently I had to setup and present my idea of a ssh bastion host. You may have already heard this as jump host or a security ssh hoping station or ssh gateway or even something else.

The main concept

Disclaimer: This is just a proof of concept (PoC). May need a few adjustments.

The destination VM may be on another VPC, perhaps it does not have a public DNS or even a public IP. Think of this VM as not accessible. Only the ssh bastion server can reach this VM. So we need to first reach the bastion.

SSH Config

To begin with, I will share my initial sshd_config to get an idea of my current ssh setup

AcceptEnv LANG LC_*

ChallengeResponseAuthentication no

Compression no

MaxSessions 3

PasswordAuthentication no

PermitRootLogin no

Port 12345

PrintMotd no

Subsystem sftp /usr/lib/openssh/sftp-server

UseDNS no

UsePAM yes

X11Forwarding no

AllowUsers ebal

- I only allow, user ebal to connect via ssh.

- I do not allow the root user to login via ssh.

- I use ssh keys instead of passwords.

This configuration is almost identical to both VMs

- bastion (the name of the VM that acts as a bastion server)

- VM (the name of the destination VM that is behind a DMZ/firewall)

~/.ssh/config

I am using the ssh config file to have an easier user experience when using ssh

Host bastion

Hostname 127.71.16.12

Port 12345

IdentityFile ~/.ssh/id_ed25519.vm

Host vm

Hostname 192.13.13.186

Port 23456

Host *

User ebal

ServerAliveInterval 300

ServerAliveCountMax 10

ConnectTimeout=60

Create a new user to test this

Let us create a new user for testing.

User/Group

$ sudo groupadd ebal_test

$ sudo useradd -g ebal_test -m ebal_test

$ id ebal_test

uid=1000(ebal_test) gid=1000(ebal_test) groups=1000(ebal_test)

Perms

Copy .ssh directory from current user (<== lazy sysadmin)

$ sudo cp -ravx /home/ebal/.ssh/ /home/ebal_test/

$ sudo chown -R ebal_test:ebal_test /home/ebal_test/.ssh

$ sudo ls -la ~ebal_test/.ssh/

total 12

drwxr-x---. 2 ebal_test ebal_test 4096 Sep 20 2019 .

drwx------. 3 ebal_test ebal_test 4096 Jun 23 15:56 ..

-r--r-----. 1 ebal_test ebal_test 181 Sep 20 2019 authorized_keys

$ sudo ls -ld ~ebal_test/.ssh/

drwxr-x---. 2 ebal_test ebal_test 4096 Sep 20 2019 /home/ebal_test/.ssh/

bastion sshd config

Edit the ssh daemon configuration file to append the below entries

cat /etc/ssh/sshd_config

AllowUsers ebal ebal_test

Match User ebal_test

AllowAgentForwarding no

AllowTcpForwarding yes

X11Forwarding no

PermitTunnel no

GatewayPorts no

ForceCommand echo 'This account can only be used for ProxyJump (ssh -J)'

Don’t forget to restart sshd

systemctl restart sshd

As you have seen above, I now allow two (2) users to access the ssh daemon (AllowUsers). This can also work with AllowGroups

Testing bastion

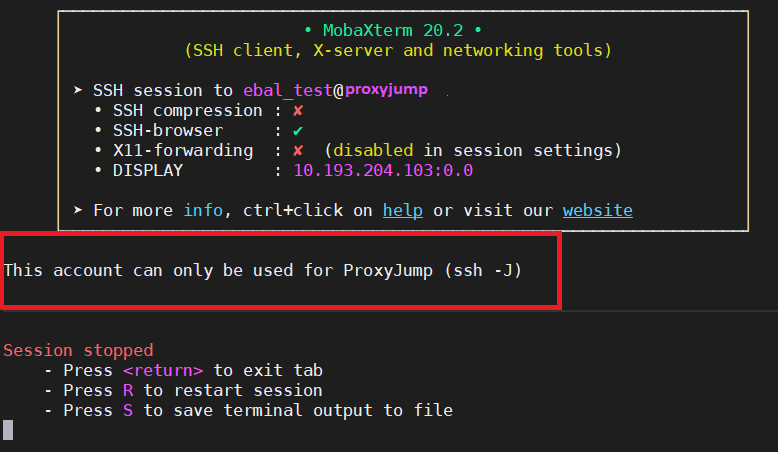

Let’s try to connect to this bastion VM

$ ssh bastion -l ebal_test uptime

This account can only be used for ProxyJump (ssh -J)$ ssh bastion -l ebal_test

This account can only be used for ProxyJump (ssh -J)

Connection to 127.71.16.12 closed.

Interesting …

We can not login into this machine.

Let’s try with our personal user

$ ssh bastion -l ebal uptime

18:49:14 up 3 days, 9:14, 0 users, load average: 0.00, 0.00, 0.00

Perfect.

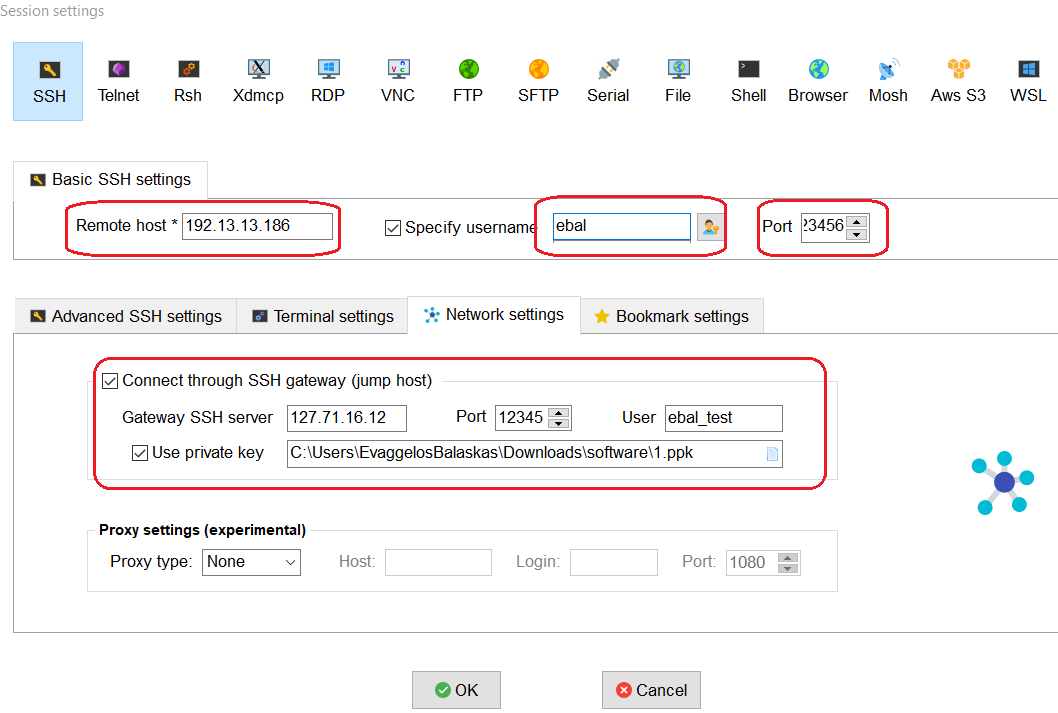

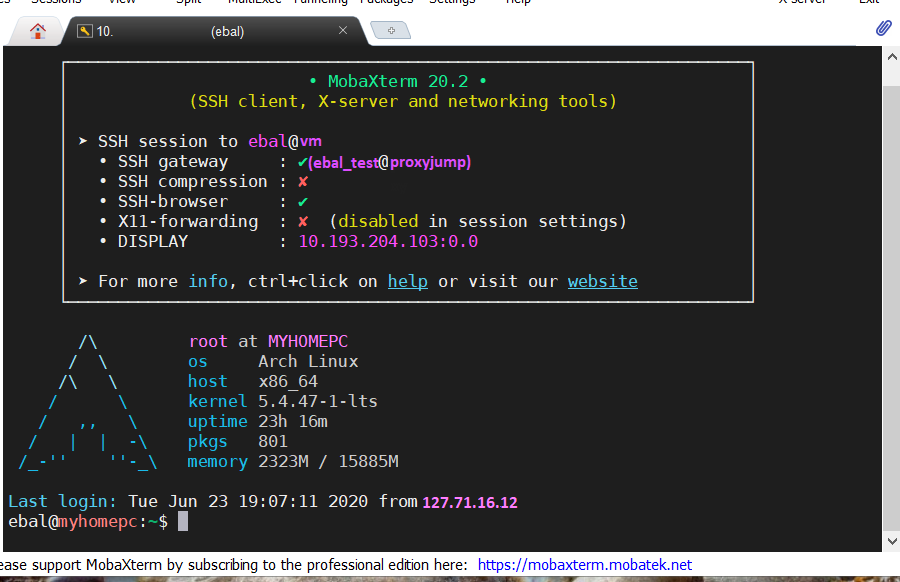

Let’s try from windows (mobaxterm)

mobaxterm is putty on steroids! There is also a portable version, so there is no need of installation. You can just download and extract it.

Interesting…

Destination VM

Now it is time to test our access to the destination VM

$ ssh VM

ssh: connect to host 192.13.13.186 port 23456: Connection refused

bastion

$ ssh -J ebal_test@bastion ebal@vm uptime

19:07:25 up 22:24, 2 users, load average: 0.00, 0.01, 0.00

$ ssh -J ebal_test@bastion ebal@vm

Last login: Tue Jun 23 19:05:29 2020 from 94.242.59.170

ebal@vm:~$

ebal@vm:~$ exit

logout

Success !

Explain Command

Using this command

ssh -J ebal_test@bastion ebal@vm

- is telling the ssh client command to use the ProxyJump feature.

- Using the user ebal_test on bastion machine and

- connect with the user ebal on vm.

So we can have different users!

ssh/config

Now, it is time to put everything under our ~./ssh/config file

Host bastion

Hostname 127.71.16.12

Port 12345

User ebal_test

IdentityFile ~/.ssh/id_ed25519.vm

Host vm

Hostname 192.13.13.186

ProxyJump bastion

User ebal

Port 23456

and try again

$ ssh vm uptime

19:17:19 up 22:33, 1 user, load average: 0.22, 0.11, 0.03

mobaxterm with bastion

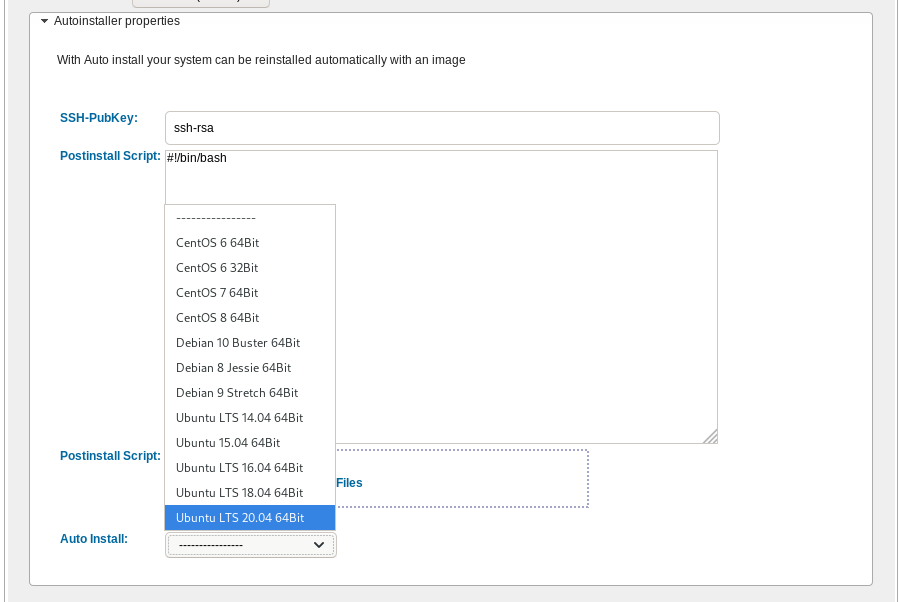

It is a known fact, that my favorite hosting provider is edis. I’ve seen them improving their services all these years, without forgeting their customers. Their support is great and I am really happy working with them.

That said, they dont offer (yet) a public infrastructre API like hetzner, linode or digitalocean but they offer an Auto Installer option to configure your VPS via a post-install shell script, put your ssh key and select your basic OS image.

I am experimenting with this option the last few weeks, but I wanted to use my currect cloud-init configuration file without making many changes. The goal is to produce a VPS image that when finished will be ready to accept my ansible roles without making any addition change or even login to this VPS.

So here is my current solution on how to use the post-install option to provide my current cloud-init configuration!

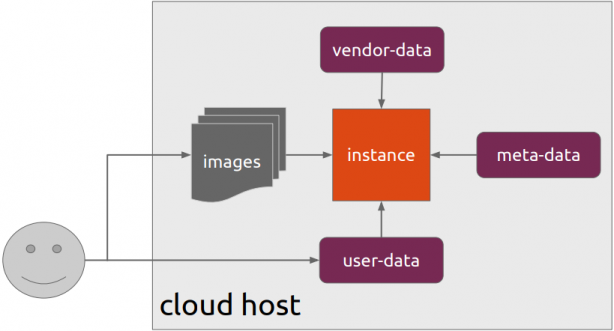

cloud-init

Josh Powers @ DebConf17

I will not get into cloud-init details in this blog post, but tldr; has stages, has modules, you provide your own user-data file (yaml) and it supports datasources. All these things is for telling cloud-init what to do, what to configure and when to configure it (in which step).

NoCloud Seed

I am going to use NoCloud datastore for this experiment.

so I need to configure these two (2) files

/var/lib/cloud/seed/nocloud-net/meta-data

/var/lib/cloud/seed/nocloud-net/user-dataInstall cloud-init

My first entry in the post-install shell script should be

apt-get update && apt-get install cloud-initthus I can be sure of two (2) things. First the VPS has already network access and I dont need to configure it, and second install cloud-init software, just to be sure that is there.

Variables

I try to keep my user-data file very simple but I would like to configure hostname and the sshd port.

HOSTNAME="complimentary"

SSHDPORT=22422

Users

Add a single user, provide a public ssh key for authentication and enable sudo access to this user.

users:

- name: ebal

ssh_import_id:

- gh:ebal

shell: /bin/bash

sudo: ALL=(ALL) NOPASSWD:ALL

Hardening SSH

- Change sshd port

- Disable Root

- Disconnect Idle Sessions

- Disable Password Auth

- Disable X Forwarding

- Allow only User (or group)

write_files:

- path: /etc/ssh/sshd_config

content: |

Port $SSHDPORT

PermitRootLogin no

ChallengeResponseAuthentication no

ClientAliveInterval 600

ClientAliveCountMax 3

UsePAM yes

UseDNS no

X11Forwarding no

PrintMotd no

AcceptEnv LANG LC_*

Subsystem sftp /usr/lib/openssh/sftp-server

PasswordAuthentication no

AllowUsers ebal

enable firewall

ufw allow $SSHDPORT/tcp && ufw enableremove cloud-init

and last but not least, I need to remove cloud-init in the end

apt-get -y autoremove --purge cloud-init lxc lxd snapdPost Install Shell script

let’s put everything together

#!/bin/bash

apt-get update && apt-get install cloud-init

HOSTNAME="complimentary"

SSHDPORT=22422

mkdir -p /var/lib/cloud/seed/nocloud-net

# Meta Data

cat > /var/lib/cloud/seed/nocloud-net/meta-data <<EOF

dsmode: local

EOF

# User Data

cat > /var/lib/cloud/seed/nocloud-net/user-data <<EOF

#cloud-config

disable_root: true

ssh_pwauth: no

users:

- name: ebal

ssh_import_id:

- gh:ebal

shell: /bin/bash

sudo: ALL=(ALL) NOPASSWD:ALL

write_files:

- path: /etc/ssh/sshd_config

content: |

Port $SSHDPORT

PermitRootLogin no

ChallengeResponseAuthentication no

UsePAM yes

UseDNS no

X11Forwarding no

PrintMotd no

AcceptEnv LANG LC_*

Subsystem sftp /usr/lib/openssh/sftp-server

PasswordAuthentication no

AllowUsers ebal

# Set TimeZone

timezone: Europe/Athens

HOSTNAME: $HOSTNAME

# Install packages

packages:

- figlet

- mlocate

- python3-apt

- bash-completion

# Update/Upgrade & Reboot if necessary

package_update: true

package_upgrade: true

package_reboot_if_required: true

# PostInstall

runcmd:

- figlet $HOSTNAME > /etc/motd

- updatedb

# Firewall

- ufw allow $SSHDPORT/tcp && ufw enable

# Remove cloud-init

- apt-get -y autoremove --purge cloud-init lxc lxd snapd

- apt-get -y --purge autoremove

- apt -y autoclean

- apt -y clean all

EOF

cloud-init clean --logs

cloud-init init --local

That’s it !

After a while (needs a few reboot) our VPS is up & running and we can use ansible to configure it.

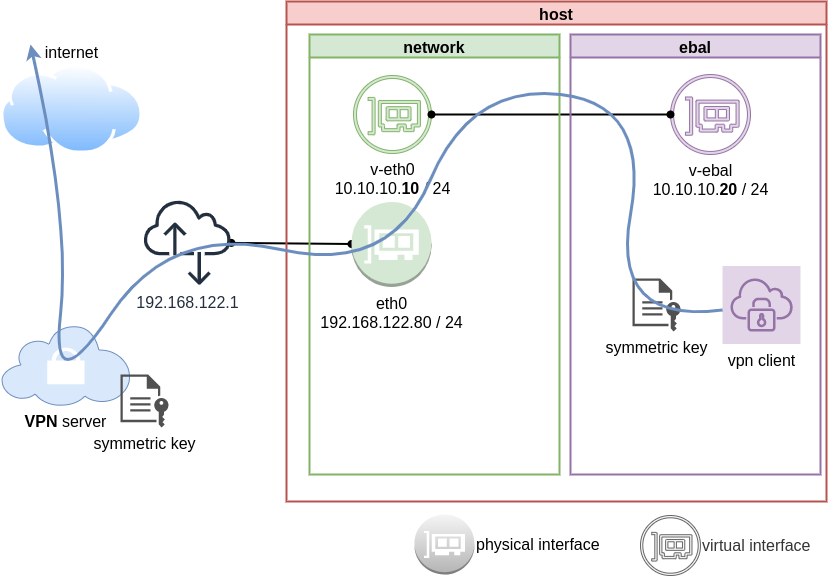

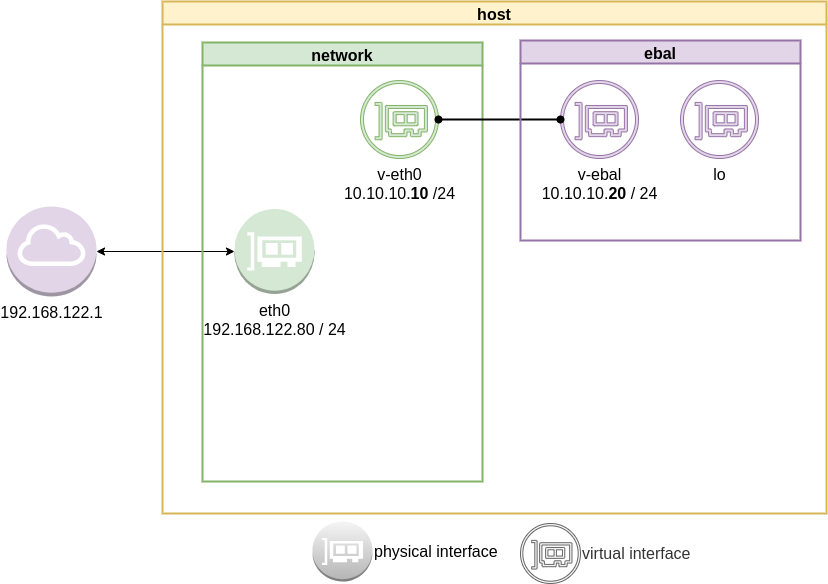

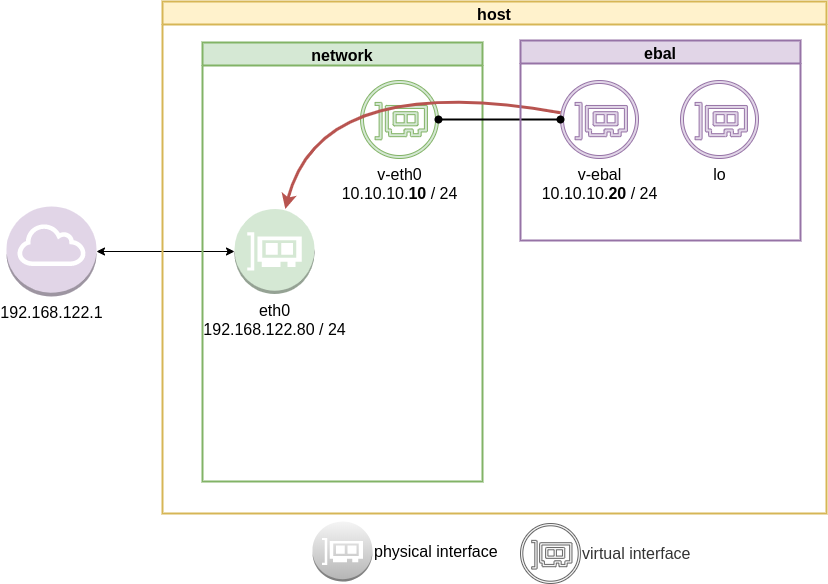

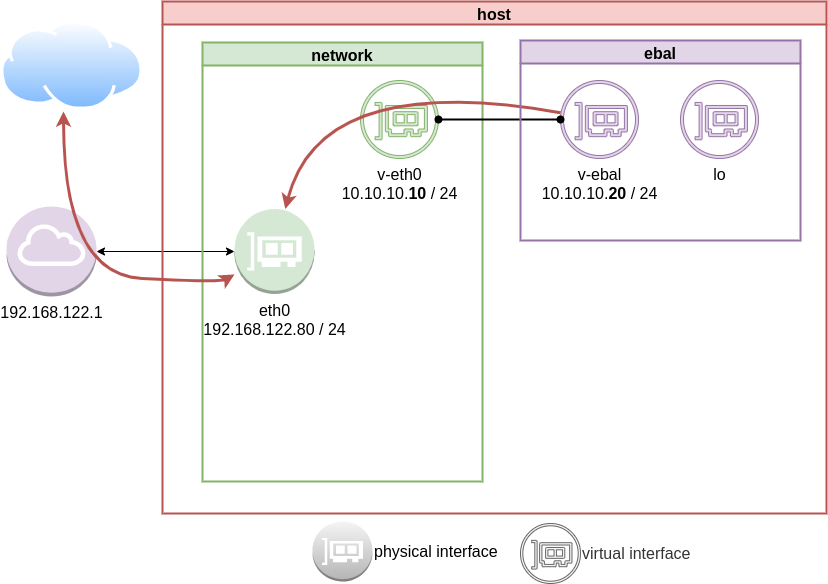

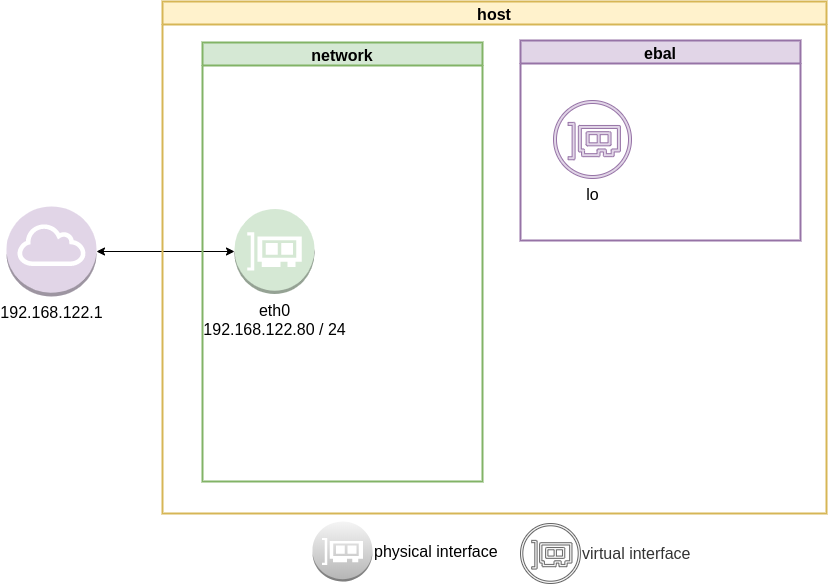

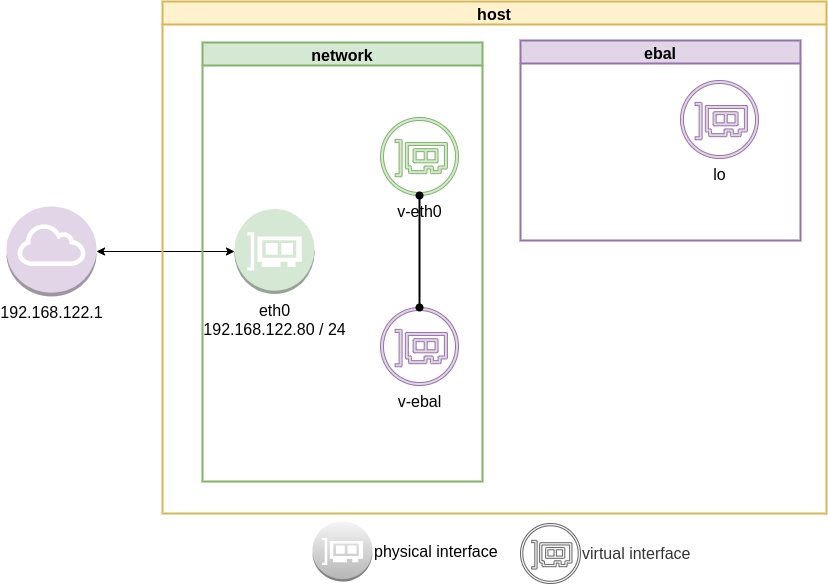

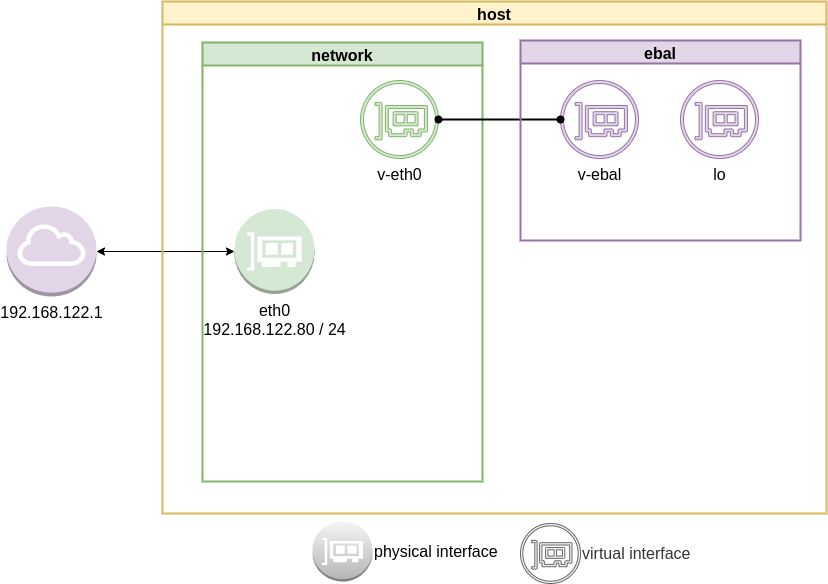

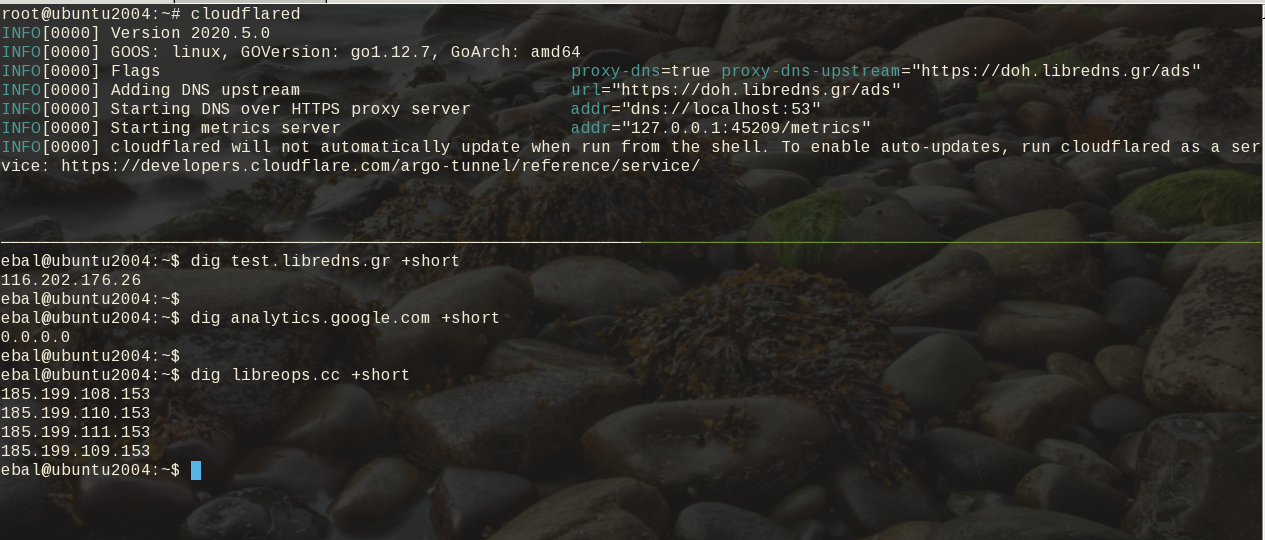

Previously on … Network Namespaces - Part Two we provided internet access to the namespace, enabled a different DNS than our system and run a graphical application (xterm/firefox) from within.

The scope of this article is to run vpn service from this namespace. We will run a vpn-client and try to enable firewall rules inside.

dsvpn

My VPN choice of preference is dsvpn and you can read in the below blog post, how to setup it.

dsvpn is a TCP, point-to-point VPN, using a symmetric key.

The instructions in this article will give you an understanding how to run a different vpn service.

Find your external IP

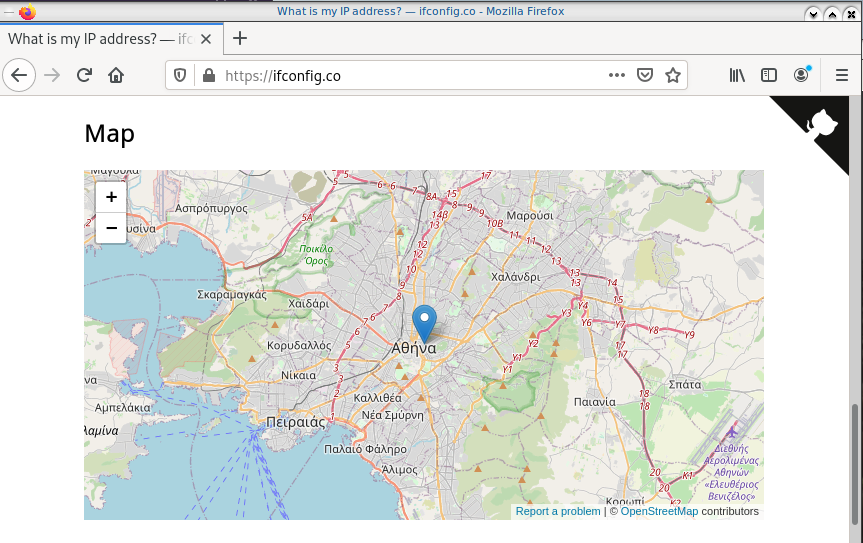

Before running the vpn client, let’s see what is our current external IP address

ip netns exec ebal curl ifconfig.co

62.103.103.103

The above IP is an example.

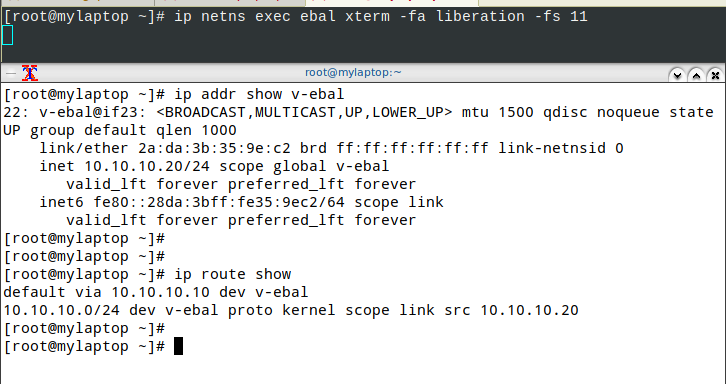

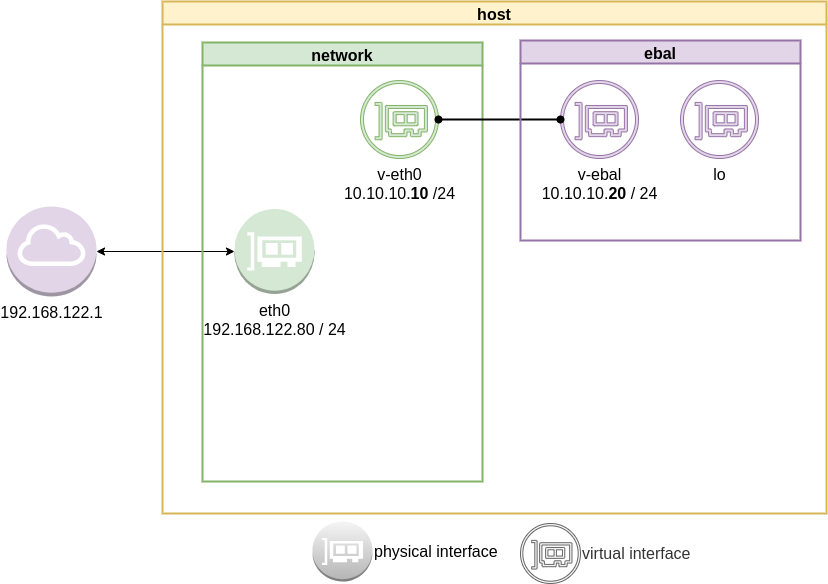

IP address and route of the namespace

ip netns exec ebal ip address show v-ebal

375: v-ebal@if376: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c2:f3:a4:8a:41:47 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.20/24 scope global v-ebal

valid_lft forever preferred_lft forever

inet6 fe80::c0f3:a4ff:fe8a:4147/64 scope link

valid_lft forever preferred_lft forever

ip netns exec ebal ip route show

default via 10.10.10.10 dev v-ebal

10.10.10.0/24 dev v-ebal proto kernel scope link src 10.10.10.20

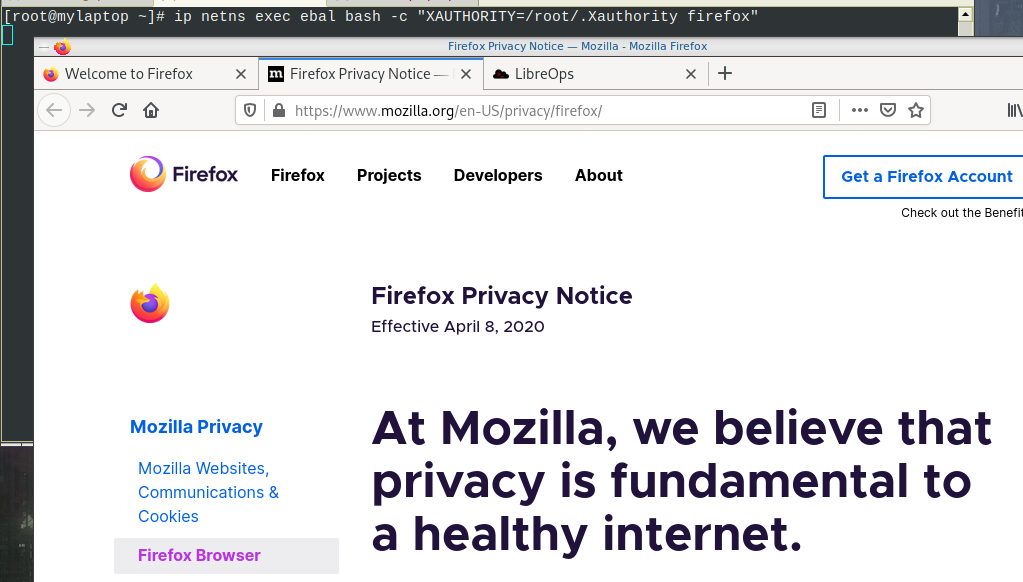

Firefox

Open firefox (see part-two) and visit ifconfig.co we noticed see that the location of our IP is based in Athens, Greece.

ip netns exec ebal bash -c "XAUTHORITY=/root/.Xauthority firefox"

Run VPN client

We have the symmetric key dsvpn.key and we know the VPN server’s IP.

ip netns exec ebal dsvpn client dsvpn.key 93.184.216.34 443

Interface: [tun0]

Trying to reconnect

Connecting to 93.184.216.34:443...

net.ipv4.tcp_congestion_control = bbr

Connected

Host

We can not see this tunnel vpn interface from our host machine

# ip link

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether 94:de:80:6a:de:0e brd ff:ff:ff:ff:ff:ff

376: v-eth0@if375: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether 1a:f7:c2:fb:60:ea brd ff:ff:ff:ff:ff:ff link-netns ebal

netns

but it exists inside the namespace, we can see tun0 interface here

ip netns exec ebal ip link

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

3: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 9000 qdisc fq_codel state UNKNOWN mode DEFAULT group default qlen 500

link/none

375: v-ebal@if376: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP mode DEFAULT group default qlen 1000

link/ether c2:f3:a4:8a:41:47 brd ff:ff:ff:ff:ff:ff link-netnsid 0

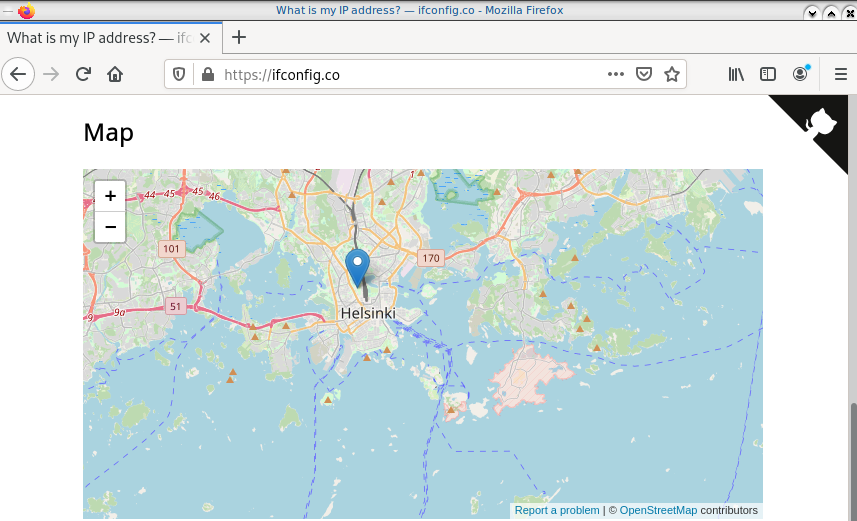

Find your external IP again

Checking your external internet IP from within the namespace

ip netns exec ebal curl ifconfig.co

93.184.216.34Firefox netns

running again firefox, we will noticed that our the location of our IP is based in Helsinki (vpn server’s location).

ip netns exec ebal bash -c "XAUTHORITY=/root/.Xauthority firefox"

systemd

We can wrap the dsvpn client under a systemcd service

[Unit]

Description=Dead Simple VPN - Client

[Service]

ExecStart=ip netns exec ebal /usr/local/bin/dsvpn client /root/dsvpn.key 93.184.216.34 443

Restart=always

RestartSec=20

[Install]

WantedBy=network.targetStart systemd service

systemctl start dsvpn.service

Verify

ip -n ebal a

1: lo: <LOOPBACK> mtu 65536 qdisc noop state DOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

4: tun0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 9000 qdisc fq_codel state UNKNOWN group default qlen 500

link/none

inet 192.168.192.1 peer 192.168.192.254/32 scope global tun0

valid_lft forever preferred_lft forever

inet6 64:ff9b::c0a8:c001 peer 64:ff9b::c0a8:c0fe/96 scope global

valid_lft forever preferred_lft forever

inet6 fe80::ee69:bdd8:3554:d81/64 scope link stable-privacy

valid_lft forever preferred_lft forever

375: v-ebal@if376: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether c2:f3:a4:8a:41:47 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet 10.10.10.20/24 scope global v-ebal

valid_lft forever preferred_lft forever

inet6 fe80::c0f3:a4ff:fe8a:4147/64 scope link

valid_lft forever preferred_lft forever

ip -n ebal route

default via 10.10.10.10 dev v-ebal

10.10.10.0/24 dev v-ebal proto kernel scope link src 10.10.10.20

192.168.192.254 dev tun0 proto kernel scope link src 192.168.192.1

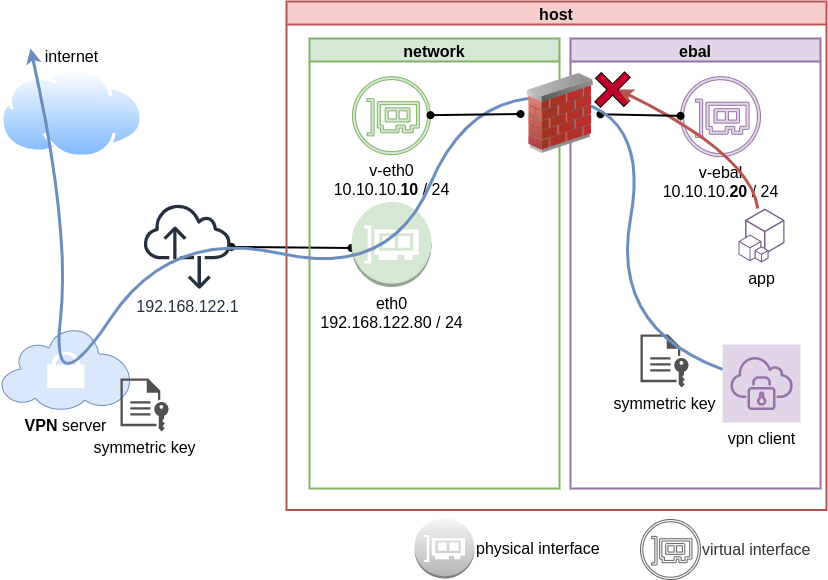

Firewall

We can also have different firewall policies for each namespace

outside

# iptables -nvL | wc -l

127

inside

ip netns exec ebal iptables -nvL

Chain INPUT (policy ACCEPT 9 packets, 2547 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 2 packets, 216 bytes)

pkts bytes target prot opt in out source destination

So for the VPN service running inside the namespace, we can REJECT every network traffic, except the traffic towards our VPN server and of course the veth interface (point-to-point) to our host machine.

iptable rules

Enter the namespace

inside

ip netns exec ebal bash

Before

verify that iptables rules are clear

iptables -nvL

Chain INPUT (policy ACCEPT 25 packets, 7373 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 4 packets, 376 bytes)

pkts bytes target prot opt in out source destination

Enable firewall

./iptables.netns.ebal.sh

The content of this file

## iptable rules

iptables -A INPUT -i lo -j ACCEPT

iptables -A INPUT -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

iptables -A INPUT -m conntrack --ctstate INVALID -j DROP

iptables -A INPUT -p icmp --icmp-type 8 -m conntrack --ctstate NEW -j ACCEPT

## netns - incoming

iptables -A INPUT -i v-ebal -s 10.0.0.0/8 -j ACCEPT

## Reject incoming traffic

iptables -A INPUT -j REJECT

## DSVPN

iptables -A OUTPUT -p tcp -m tcp -o v-ebal -d 93.184.216.34 --dport 443 -j ACCEPT

## net-ns outgoing

iptables -A OUTPUT -o v-ebal -d 10.0.0.0/8 -j ACCEPT

## Allow tun

iptables -A OUTPUT -o tun+ -j ACCEPT

## Reject outgoing traffic

iptables -A OUTPUT -p tcp -j REJECT --reject-with tcp-reset

iptables -A OUTPUT -p udp -j REJECT --reject-with icmp-port-unreachable

After

iptables -nvL

Chain INPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 ACCEPT all -- lo * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DROP all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate INVALID

0 0 ACCEPT icmp -- * * 0.0.0.0/0 0.0.0.0/0 icmptype 8 ctstate NEW

1 349 ACCEPT all -- v-ebal * 10.0.0.0/8 0.0.0.0/0

0 0 REJECT all -- * * 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

0 0 ACCEPT all -- lo * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DROP all -- * * 0.0.0.0/0 0.0.0.0/0 ctstate INVALID

0 0 ACCEPT icmp -- * * 0.0.0.0/0 0.0.0.0/0 icmptype 8 ctstate NEW

0 0 ACCEPT all -- v-ebal * 10.0.0.0/8 0.0.0.0/0

0 0 REJECT all -- * * 0.0.0.0/0 0.0.0.0/0 reject-with icmp-port-unreachable

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 ACCEPT tcp -- * v-ebal 0.0.0.0/0 95.216.215.96 tcp dpt:8443

0 0 ACCEPT all -- * v-ebal 0.0.0.0/0 10.0.0.0/8

0 0 ACCEPT all -- * tun+ 0.0.0.0/0 0.0.0.0/0