A couple of years ago I wrote an article about the tools I use daily. For the last nine (9) months I have been partially using Win10 due to new job challenges, so I would like to write an updated version of my previous article.

I will try to use the same structure as the previous article for comparison, although this is a nine-to-five (work-related) setup. So here it goes.

Operating System

I use Win10 as the primary operating system on my work laptop. I have two impediments that do not work on a linux distribution:

- WebEx

- MS Office

We are using WebEx as our primary communication tool. We share our screens and keep our video cameras on, so that we can see each other. We also have a lot of meetings that integrate with our company’s Outlook. I can use OWA as an alternative, but in practice it is difficult to use both of them on a linux desktop.

I have considered using a VM, but a Win10 VM needs more than 4G of RAM and a couple of CPUs just to boot up. That means I would have to give up part of my work laptop's resources for half the day, every day. So for the time being I am staying with Win10 as the primary operating system.

Desktop

Default Win10 desktop

Disk / Filesystem

Default Win10 filesystem with BitLocker. Every HW change will lock the entire system, and in the past that was exactly the case!

Dropbox as cloud sync software, an encfs partition, and syncthing for securely syncing personal files.

Mostly OWA for calendar purposes and … still thunderbird as my primary mail reader.

Shell

WSL … waiting for the official WSLv2! This is a huge, HUGE upgrade for Windows. I have set up an archlinux WSL environment so that I can keep working in a linux environment, I mean bash. I use my WSL archlinux as a jumphost to my VMs.

Editor

Using Visual Studio Code for any python (or any other) scripting code file. Vim within WSL and notepad for temp text notes. In the last year I have switched to Boostnote and markdown notes.

Browser

Multiple Instances of firefox, chromium, firefox Nightly, Tor Browser and Brave

Communication

I use mostly slack and signal-desktop. We are using webex but I prefer zoom. Less and less riot-matrix.

Media

VLC for windows, what else? Also gimp for image editing. I have switched to spotify for music and draw for diagrams. Lastly, I use CPod for podcasts.

In conclusion

I have switched to a majority of electron applications. I use the same applications on my Linux boxes. Encrypted notes on boostnote, synced over syncthing. Same browsers, same bash/shell; the only things I don't have on my linux boxes are webex and outlook. Considering everything else, I think it is a decent setup across every distro.

MariaDB Galera Cluster on Ubuntu 18.04.2 LTS

Last Edit: 2019 06 11

Thanks to Manolis Kartsonakis for the extra info.

Official Notes here:

MariaDB Galera Cluster

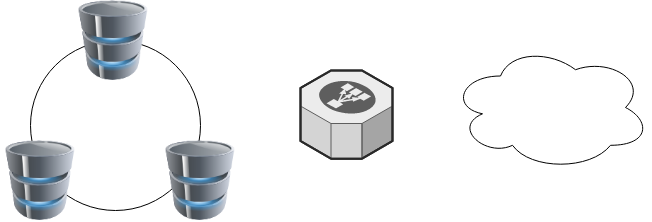

A Galera Cluster is a synchronous multi-master cluster setup. Each node can act as master. The XtraDB/InnoDB storage engine can sync its data using rsync. Each data transaction gets a globally unique ID, and then, using Write Set REPLication (wsrep), the nodes sync data with each other. When a new node joins the cluster, State Snapshot Transfers (SSTs) synchronize the full data set, whereas Incremental State Transfers (ISTs) sync only the missing data.

With this setup we can have:

- Data Redundancy

- Scalability

- Availability

Installation

On Ubuntu 18.04.2 LTS, three packages should exist on every node.

So run the commands below on all of the nodes, and change your internal IPs accordingly

as root

# apt -y install mariadb-server

# apt -y install galera-3

# apt -y install rsync

host file

as root

# echo 10.10.68.91 gal1 >> /etc/hosts

# echo 10.10.68.92 gal2 >> /etc/hosts

# echo 10.10.68.93 gal3 >> /etc/hosts
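Appending with `>>` adds a duplicate line every time the commands above are run again. A minimal sketch of an idempotent variant follows; `add_host` is a hypothetical helper name (not part of any package), and the file is passed as a parameter so it can be tried against a scratch file before touching /etc/hosts.

```shell
# add_host FILE IP NAME: append "IP NAME" to FILE unless NAME is already mapped.
# add_host is a hypothetical helper for illustration only.
add_host() (
    file=$1 ip=$2 name=$3
    # awk exits 0 only if NAME appears as a hostname field on a non-comment line.
    if ! awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null
    then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)
```

As root, `add_host /etc/hosts 10.10.68.91 gal1` can then be repeated safely on every node.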

Storage Engine

Start MariaDB/MySQL on one node and check the default storage engine. It should be InnoDB:

MariaDB [(none)]> show variables like 'default_storage_engine';

or

echo "SHOW Variables like 'default_storage_engine';" | mysql

+------------------------+--------+

| Variable_name | Value |

+------------------------+--------+

| default_storage_engine | InnoDB |

+------------------------+--------+

Architecture

A Galera Cluster should be behind a Load Balancer (proxy) and you should never talk with a node directly.

Galera Configuration

Now copy the configuration file below to all 3 nodes:

/etc/mysql/conf.d/galera.cnf

[mysqld]

binlog_format=ROW

default-storage-engine=InnoDB

innodb_autoinc_lock_mode=2

bind-address=0.0.0.0

# Galera Provider Configuration

wsrep_on=ON

wsrep_provider=/usr/lib/galera/libgalera_smm.so

# Galera Cluster Configuration

wsrep_cluster_name="galera_cluster"

wsrep_cluster_address="gcomm://10.10.68.91,10.10.68.92,10.10.68.93"

# Galera Synchronization Configuration

wsrep_sst_method=rsync

# Galera Node Configuration

wsrep_node_address="10.10.68.91"

wsrep_node_name="gal1"

Per Node

Be careful: the last 2 lines must be changed on each node:

Node 01

# Galera Node Configuration

wsrep_node_address="10.10.68.91"

wsrep_node_name="gal1"

Node 02

# Galera Node Configuration

wsrep_node_address="10.10.68.92"

wsrep_node_name="gal2"

Node 03

# Galera Node Configuration

wsrep_node_address="10.10.68.93"

wsrep_node_name="gal3"
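Since only these two lines differ between nodes, they can be generated instead of edited by hand. This is a small sketch that assumes the addressing scheme used in this post (node N has the address 10.10.68.(90+N) and the name galN); `node_config` is a hypothetical helper name.

```shell
# node_config N: print the per-node section for node number N (1, 2, 3, ...).
# Assumes this post's scheme: address 10.10.68.(90+N), name galN.
node_config() {
    printf '# Galera Node Configuration\n'
    printf 'wsrep_node_address="10.10.68.%d"\n' "$((90 + $1))"
    printf 'wsrep_node_name="gal%d"\n' "$1"
}

# Example: print the section for the second node.
node_config 2
```

The output can be appended to the shared part of galera.cnf on each node.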

Galera New Cluster

We are ready to create our galera cluster:

galera_new_cluster

or

mysqld --wsrep-new-cluster

JournalCTL

Jun 10 15:01:20 gal1 systemd[1]: Starting MariaDB 10.1.40 database server...

Jun 10 15:01:24 gal1 sh[2724]: WSREP: Recovered position 00000000-0000-0000-0000-000000000000:-1

Jun 10 15:01:24 gal1 mysqld[2865]: 2019-06-10 15:01:24 139897056971904 [Note] /usr/sbin/mysqld (mysqld 10.1.40-MariaDB-0ubuntu0.18.04.1) starting as process 2865 ...

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2906]: Upgrading MySQL tables if necessary.

Jun 10 15:01:24 gal1 systemd[1]: Started MariaDB 10.1.40 database server.

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2909]: /usr/bin/mysql_upgrade: the '--basedir' option is always ignored

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2909]: Looking for 'mysql' as: /usr/bin/mysql

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2909]: Looking for 'mysqlcheck' as: /usr/bin/mysqlcheck

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2909]: This installation of MySQL is already upgraded to 10.1.40-MariaDB, use --force if you still need to run mysql_upgrade

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2918]: Checking for insecure root accounts.

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2922]: WARNING: mysql.user contains 4 root accounts without password or plugin!

Jun 10 15:01:24 gal1 /etc/mysql/debian-start[2923]: Triggering myisam-recover for all MyISAM tables and aria-recover for all Aria tables

# ss -at '( sport = :mysql )'

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 80 127.0.0.1:mysql 0.0.0.0:*

# echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | egrep -i 'cluster|uuid|ready' | column -t

wsrep_cluster_conf_id 1

wsrep_cluster_size 1

wsrep_cluster_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_cluster_status Primary

wsrep_gcomm_uuid d67e5b7c-8b90-11e9-ba3d-23ea221848fd

wsrep_local_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_ready ON

Second Node

systemctl restart mariadb.service

root@gal2:~# echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | egrep -i 'cluster|uuid|ready' | column -t

wsrep_cluster_conf_id 2

wsrep_cluster_size 2

wsrep_cluster_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_cluster_status Primary

wsrep_gcomm_uuid a5eaae3e-8b91-11e9-9662-0bbe68c7d690

wsrep_local_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_ready ON

Third Node

systemctl restart mariadb.service

root@gal3:~# echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | egrep -i 'cluster|uuid|ready' | column -t

wsrep_cluster_conf_id 3

wsrep_cluster_size 3

wsrep_cluster_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_cluster_status Primary

wsrep_gcomm_uuid 013e1847-8b92-11e9-9055-7ac5e2e6b947

wsrep_local_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_ready ON
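The three values to watch in the outputs above are wsrep_cluster_size, wsrep_cluster_status and wsrep_ready. A minimal sketch of a check that reads the same status output on stdin is shown below; `cluster_healthy` is a hypothetical helper name, and the expected node count is passed as an argument.

```shell
# cluster_healthy EXPECTED_SIZE: read "SHOW STATUS LIKE 'wsrep_%'" output on stdin
# and succeed only if the node is Primary, ready, and sees EXPECTED_SIZE nodes.
# cluster_healthy is a hypothetical helper for illustration only.
cluster_healthy() {
    awk -v want="$1" '
        $1 == "wsrep_cluster_status" { status = $2 }
        $1 == "wsrep_cluster_size"   { size = $2 }
        $1 == "wsrep_ready"          { ready = $2 }
        END { exit !(status == "Primary" && ready == "ON" && size == want) }'
}
```

For example: `echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | cluster_healthy 3 && echo OK`.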

Primary Component (PC)

The last node to leave the cluster has, in theory, all the transactions. That means it should be the first one to start the next time, after a power-off.

State

cat /var/lib/mysql/grastate.dat

eg.

# GALERA saved state

version: 2.1

uuid: 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

seqno: -1

safe_to_bootstrap: 0

If safe_to_bootstrap: 1, then you can bootstrap this node as Primary.
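The check on the state file can be scripted, so that a node is never bootstrapped by accident. The sketch below only parses the file format shown above; `safe_to_bootstrap` is a hypothetical helper name.

```shell
# safe_to_bootstrap FILE: succeed only if FILE (a grastate.dat) contains
# "safe_to_bootstrap: 1". Fails for 0, for a missing key, or a missing file.
# safe_to_bootstrap is a hypothetical helper for illustration only.
safe_to_bootstrap() {
    awk -F': *' '$1 == "safe_to_bootstrap" { v = $2 } END { exit !(v == 1) }' "$1"
}
```

One possible use is `safe_to_bootstrap /var/lib/mysql/grastate.dat && galera_new_cluster`, which starts a new cluster only from a node that is marked as safe.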

Common Mistakes

Sometimes DBAs want to set up a new cluster (let's say to upgrade to a new schema that is not compatible with the previous one), so they want a clean state/directory. The most common way is to move the current mysql directory:

mv /var/lib/mysql /var/lib/mysql_BAK

If you try to start your galera node, it will fail:

# systemctl restart mariadb

WSREP: Failed to start mysqld for wsrep recovery:

[Warning] Can't create test file /var/lib/mysql/gal1.lower-test

Failed to start MariaDB 10.1.40 database server

You need to create and initialize the mysql directory first:

mkdir -pv /var/lib/mysql

chown -R mysql:mysql /var/lib/mysql

chmod 0755 /var/lib/mysql

mysql_install_db -u mysql

On another node, cluster_size = 2

# echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | egrep -i 'cluster|uuid|ready' | column -t

wsrep_cluster_conf_id 4

wsrep_cluster_size 2

wsrep_cluster_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_cluster_status Primary

wsrep_gcomm_uuid a5eaae3e-8b91-11e9-9662-0bbe68c7d690

wsrep_local_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_ready ON

then:

# systemctl restart mariadb

rsync from the Primary:

Jun 10 15:19:00 gal1 rsyncd[3857]: rsyncd version 3.1.2 starting, listening on port 4444

Jun 10 15:19:01 gal1 rsyncd[3884]: connect from gal3 (192.168.122.93)

Jun 10 15:19:01 gal1 rsyncd[3884]: rsync to rsync_sst/ from gal3 (192.168.122.93)

Jun 10 15:19:01 gal1 rsyncd[3884]: receiving file list

# echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | egrep -i 'cluster|uuid|ready' | column -t

wsrep_cluster_conf_id 5

wsrep_cluster_size 3

wsrep_cluster_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_cluster_status Primary

wsrep_gcomm_uuid 12afa7bc-8b93-11e9-88fc-6f41be61a512

wsrep_local_state_uuid 8abc6a1b-8adc-11e9-a42b-c6022ea4412c

wsrep_ready ON

Be Aware: Try to keep your DATA directory on a separate storage disk

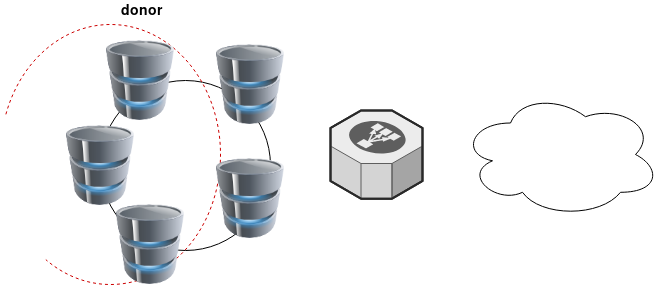

Adding new Nodes

A healthy Quorum has an odd number of nodes. So when you scale your galera cluster, consider adding two (2) nodes at every step!

# echo 10.10.68.94 gal4 >> /etc/hosts

# echo 10.10.68.95 gal5 >> /etc/hosts

Data replication will lock your donor node, so it is best to take your donor node out of your Load Balancer.

Then explicitly point your new nodes to your donor node by adding the line below to your configuration file:

wsrep_sst_donor= gal3

After the synchronization:

- comment-out the above line

- restart mysql service and

- put all three nodes behind the Load Balancer
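The advice about odd node counts at the start of this section follows from majority arithmetic: a cluster keeps quorum only while more than half of its nodes can still see each other. A small worked sketch (the function names are made up for this example):

```shell
# majority N: smallest number of nodes that is more than half of N.
majority() { echo $(( $1 / 2 + 1 )); }

# tolerated N: how many nodes can fail while a majority still survives.
tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 3 4 5; do
    echo "nodes=$n majority=$(majority "$n") tolerated_failures=$(tolerated "$n")"
done
```

Three and four nodes both tolerate a single failure, while five nodes tolerate two, so going from an odd count to the next even count adds a node without adding fault tolerance.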

Split Brain

Find the node with the max

SHOW STATUS LIKE 'wsrep_last_committed';

and set it as master by

SET GLOBAL wsrep_provider_options='pc.bootstrap=YES';
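Once the wsrep_last_committed value of every node has been collected, picking the node to bootstrap is a simple comparison. The sketch below assumes the values were gathered by hand into "node value" pairs, one per line; that input format and the name `most_advanced` are assumptions for this example.

```shell
# most_advanced: read "node seqno" pairs on stdin (one per line) and print the
# node with the highest seqno. On a tie, the first node listed wins.
# most_advanced is a hypothetical helper for illustration only.
most_advanced() {
    awk 'NR == 1 || $2 + 0 > max { max = $2 + 0; node = $1 } END { print node }'
}
```

Feeding it three lines such as `gal1 1200`, `gal2 1250` and `gal3 1249` (made-up numbers) prints `gal2`, which is the node where pc.bootstrap should be set.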

Weighted Quorum for Five Nodes

When configuring quorum weights for five nodes, use the following pattern:

node1: pc.weight = 4

node2: pc.weight = 3

node3: pc.weight = 2

node4: pc.weight = 1

node5: pc.weight = 0

eg.

SET GLOBAL wsrep_provider_options="pc.weight=3";

Within the same VPC, setting pc.weight will avoid a split-brain situation. Across different regions, you can set up something like this:

node1: pc.weight = 2

node2: pc.weight = 2

node3: pc.weight = 2

<->

node4: pc.weight = 1

node5: pc.weight = 1

node6: pc.weight = 1
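To see why the two-region layout above behaves well when the link between the regions fails, it helps to do the weight arithmetic. The sketch below uses a simplified rule (a partition keeps quorum when its weights add up to more than half of the total) and ignores nodes that left gracefully, which Galera's full formula also takes into account. `has_quorum` is a hypothetical helper name.

```shell
# has_quorum TOTAL_WEIGHT WEIGHT...: succeed if the listed partition weights
# sum to strictly more than half of TOTAL_WEIGHT (simplified quorum rule).
# has_quorum is a hypothetical helper for illustration only.
has_quorum() (
    total=$1; shift
    sum=0
    for w in "$@"; do sum=$((sum + w)); done
    [ $((sum * 2)) -gt "$total" ]
)

# Total weight above is 2+2+2+1+1+1 = 9.
has_quorum 9 2 2 2 && echo "region with weight 2 nodes stays Primary"
has_quorum 9 1 1 1 || echo "region with weight 1 nodes loses quorum"
```

With equal weights on both sides (3 against 3 out of 6), neither region would pass the check, which is the situation the different weights are meant to avoid.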

WSREP_SST: [ERROR] Error while getting data from donor node

In cases where a specific node cannot sync with or join the cluster because of the above error, we can use this workaround:

Change wsrep_sst_method from xtrabackup to rsync, restart, and check the logs.

Then revert the change back to xtrabackup.

/etc/mysql/conf.d/galera.cnf:;wsrep_sst_method = rsync

/etc/mysql/conf.d/galera.cnf:wsrep_sst_method = xtrabackup

STATUS

echo "SHOW STATUS LIKE 'wsrep_%';" | mysql | grep -Ei 'cluster|uuid|ready|commit' | column -t

TIL: arch-audit

In archlinux there is a package named: arch-audit that is

an utility like pkg-audit based on Arch CVE Monitoring Team data.

Install

# pacman -Ss arch-audit

community/arch-audit 0.1.10-1

# sudo pacman -S arch-audit

resolving dependencies...

looking for conflicting packages...

Package (1) New Version Net Change Download Size

community/arch-audit 0.1.10-1 1.96 MiB 0.57 MiB

Total Download Size: 0.57 MiB

Total Installed Size: 1.96 MiB

Run

# arch-audit

Package docker is affected by CVE-2018-15664. High risk!

Package gettext is affected by CVE-2018-18751. High risk!

Package glibc is affected by CVE-2019-9169, CVE-2019-5155, CVE-2018-20796, CVE-2016-10739. High risk!

Package libarchive is affected by CVE-2019-1000020, CVE-2019-1000019, CVE-2018-1000880, CVE-2018-1000879, CVE-2018-1000878, CVE-2018-1000877. High risk!

Package libtiff is affected by CVE-2019-7663, CVE-2019-6128. Medium risk!

Package linux-lts is affected by CVE-2018-5391, CVE-2018-3646, CVE-2018-3620, CVE-2018-3615, CVE-2018-8897, CVE-2017-8824, CVE-2017-17741, CVE-2017-17450, CVE-2017-17448, CVE-2017-16644, CVE-2017-5753, CVE-2017-5715, CVE-2018-1121, CVE-2018-1120, CVE-2017-1000379, CVE-2017-1000371, CVE-2017-1000370, CVE-2017-1000365. High risk!

Package openjpeg2 is affected by CVE-2019-6988. Low risk!

Package python-yaml is affected by CVE-2017-18342. High risk!. Update to 5.1-1 from testing repos!

Package sdl is affected by CVE-2019-7638, CVE-2019-7637, CVE-2019-7636, CVE-2019-7635, CVE-2019-7578, CVE-2019-7577, CVE-2019-7576, CVE-2019-7575, CVE-2019-7574, CVE-2019-7573, CVE-2019-7572. High risk!

Package sdl2 is affected by CVE-2019-7638, CVE-2019-7637, CVE-2019-7636, CVE-2019-7635, CVE-2019-7578, CVE-2019-7577, CVE-2019-7576, CVE-2019-7575, CVE-2019-7574, CVE-2019-7573, CVE-2019-7572. High risk!

Package unzip is affected by CVE-2018-1000035. Low risk!
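The report gets long quickly, so it can help to list only the packages marked as high risk. The sketch below relies only on the line format shown above ("Package NAME is affected by ... High risk!"); `high_risk` is a hypothetical helper name, not an arch-audit option.

```shell
# high_risk: read arch-audit output on stdin and print only the names of
# packages whose line is marked "High risk!".
# high_risk is a hypothetical helper for illustration only.
high_risk() {
    awk '$1 == "Package" && /High risk!/ { print $2 }'
}
```

Usage: `arch-audit | high_risk`.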

Open Telekom Cloud (OTC) is Deutsche Telekom’s Cloud for Business Customers. I would suggest visiting the Cloud Infrastructure & Cloud Platform Solutions page for more information, but I will try to keep this a technical post.

In this post you will find my personal notes on how to use the native python openstack CLI client to access OTC from your console.

Notes are based on Ubuntu 18.04.2 LTS

Virtual Environment

Create an isolated python virtual environment (directory) and set up everything under it:

~> mkdir -pv otc/ && cd otc/

mkdir: created directory 'otc/'

~> virtualenv -p `which python3` .

Already using interpreter /usr/bin/python3

Using base prefix '/usr'

New python executable in /home/ebal/otc/bin/python3

Also creating executable in /home/ebal/otc/bin/python

Installing setuptools, pkg_resources, pip, wheel...done.

~> source bin/activate

(otc) ebal@ubuntu:otc~>

(otc) ebal@ubuntu:otc~> python -V

Python 3.6.7

OpenStack Dependencies

Install python openstack dependencies

- openstack sdk

(otc) ebal@ubuntu:otc~> pip install python-openstacksdk

Collecting python-openstacksdk

Collecting openstacksdk (from python-openstacksdk)

Downloading https://files.pythonhosted.org/packages/

...

- openstack client

(otc) ebal@ubuntu:otc~> pip install python-openstackclient

Collecting python-openstackclient

Using cached https://files.pythonhosted.org/packages

...

Install OTC Extensions

It’s time to install the otcextensions

(otc) ebal@ubuntu:otc~> pip install otcextensions

Collecting otcextensions

Requirement already satisfied: openstacksdk>=0.19.0 in ./lib/python3.6/site-packages (from otcextensions) (0.30.0)

...

Installing collected packages: otcextensions

Successfully installed otcextensions-0.6.3

List

(otc) ebal@ubuntu:otc~> pip list | egrep '^python|otc'

otcextensions 0.6.3

python-cinderclient 4.2.0

python-glanceclient 2.16.0

python-keystoneclient 3.19.0

python-novaclient 14.1.0

python-openstackclient 3.18.0

python-openstacksdk 0.5.2

Authentication

Create a new clouds.yaml with your OTC credentials

template:

clouds:
  otc:
    auth:
      username: 'USER_NAME'
      password: 'PASS'
      project_name: 'eu-de'
      auth_url: 'https://iam.eu-de.otc.t-systems.com:443/v3'
      user_domain_name: 'OTC00000000001000000xxx'
    interface: 'public'
    identity_api_version: 3 # !Important
    ak: 'AK_VALUE' # AK/SK pair for access to OBS
    sk: 'SK_VAL

OTC Connect

Let’s test it!

(otc) ebal@ubuntu:otc~> openstack --os-cloud otc server list

+--------------------------------------+---------------+---------+-------------------------------------------------------------------+-----------------------------------+---------------+

| ID | Name | Status | Networks | Image | Flavor |

+--------------------------------------+---------------+---------+-------------------------------------------------------------------+-----------------------------------+---------------+

| XXXXXXXX-1234-4d7f-8097-YYYYYYYYYYYY | app00-prod | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.72.110 | Standard_Ubuntu_16.04_latest | s2.large.2 |

| XXXXXXXX-1234-4f7d-beaa-YYYYYYYYYYYY | app01-prod | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.95 | Standard_Ubuntu_16.04_latest | s2.medium.2 |

| XXXXXXXX-1234-4088-baa8-YYYYYYYYYYYY | app02-prod | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.76.160 | Standard_Ubuntu_16.04_latest | s2.large.4 |

| XXXXXXXX-1234-43f5-8a10-YYYYYYYYYYYY | web00-prod | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.76.121 | Standard_Ubuntu_16.04_latest | s2.xlarge.2 |

| XXXXXXXX-1234-41eb-aa0b-YYYYYYYYYYYY | web01-prod | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.76.151 | Standard_Ubuntu_16.04_latest | s2.large.4 |

| XXXXXXXX-1234-41f7-98ff-YYYYYYYYYYYY | web00-stage | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.76.150 | Standard_Ubuntu_16.04_latest | s2.large.4 |

| XXXXXXXX-1234-41b2-973f-YYYYYYYYYYYY | web01-stage | ACTIVE | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.76.120 | Standard_Ubuntu_16.04_latest | s2.xlarge.2 |

| XXXXXXXX-1234-468f-a41c-YYYYYYYYYYYY | app00-stage | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.70.111 | Community_Ubuntu_14.04_TSI_latest | s2.xlarge.2 |

| XXXXXXXX-1234-4fdf-8b4c-YYYYYYYYYYYY | app01-stage | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.64.92 | Community_Ubuntu_14.04_TSI_latest | s1.large |

| XXXXXXXX-1234-4e68-a86d-YYYYYYYYYYYY | app02-stage | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.100.66.96 | Community_Ubuntu_14.04_TSI_latest | s2.xlarge.4 |

| XXXXXXXX-1234-475d-9a66-YYYYYYYYYYYY | web00-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.102.76.11, 10.44.23.18 | Community_Ubuntu_14.04_TSI_latest | c1.medium |

| XXXXXXXX-1234-4dac-a6b1-YYYYYYYYYYYY | web01-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.14 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-458e-8e21-YYYYYYYYYYYY | web02-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.13 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-42c4-b953-YYYYYYYYYYYY | k8s02-prod | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.12 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-4225-b6af-YYYYYYYYYYYY | k8s02-stage | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.11 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-4eb1-a596-YYYYYYYYYYYY | k8s02-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.102.64.14 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-4222-b866-YYYYYYYYYYYY | k8s03-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.102.64.13 | Community_Ubuntu_14.04_TSI_latest | s1.xlarge |

| XXXXXXXX-1234-453d-a9c5-YYYYYYYYYYYY | k8s04-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.101.64.10 | Community_Ubuntu_14.04_TSI_latest | s1.2xlarge |

| XXXXXXXX-1234-4968-a2be-YYYYYYYYYYYY | k8s05-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.102.76.14, 10.44.22.138 | Community_Ubuntu_14.04_TSI_latest | c2.2xlarge |

| XXXXXXXX-1234-4c71-a00f-YYYYYYYYYYYY | k8s07-test | SHUTOFF | XXXXXXXX-YYYY-ZZZZ-AAAA-BBBBBBCCCCCC=100.102.76.170 | Community_Ubuntu_14.04_TSI_latest | c1.medium |

+--------------------------------------+---------------+---------+-------------------------------------------------------------------+-----------------------------------+---------------+
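With a list this long, a quick count per status is often more useful than the full table. The sketch below parses the default table output shown above and assumes Status is the third column; `status_summary` is a hypothetical helper name. The client's own `-f value -c Status` output options are a cleaner input if you prefer not to parse the table.

```shell
# status_summary: read an "openstack server list" table on stdin and print
# one "STATUS COUNT" line per status, sorted by status name.
# Assumes the default table layout with Status as the third column.
# status_summary is a hypothetical helper for illustration only.
status_summary() {
    awk -F'|' 'NF > 3 {
        s = $4; gsub(/ /, "", s)
        if (s != "" && s != "Status") count[s]++
    }
    END { for (s in count) print s, count[s] }' | sort
}
```

Usage: `openstack --os-cloud otc server list | status_summary`.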

Load Balancers

(otc) ebal@ubuntu:~/otc~> openstack --os-cloud otc loadbalancer list

+--------------------------------------+----------------------------------+----------------------------------+---------------+---------------------+----------+

| id | name | project_id | vip_address | provisioning_status | provider |

+--------------------------------------+----------------------------------+----------------------------------+---------------+---------------------+----------+

| XXXXXXXX-ad99-4de0-d885-YYYYYYYYYYYY | aaccaacbddd1111eee5555aaaaa22222 | 44444bbbbbbb4444444cccccc3333333 | 100.100.10.143 | ACTIVE | vlb |

+--------------------------------------+----------------------------------+----------------------------------+---------------+---------------------+----------+