I have been using Linux Software RAID for years now. It is reliable and stable (as long as your hard disks are reliable), with very few problems. One recent issue, reported by the daily cron raid-check, was this:

WARNING: mismatch_cnt is not 0 on /dev/md0

Raid Environment

A few details on this specific raid setup:

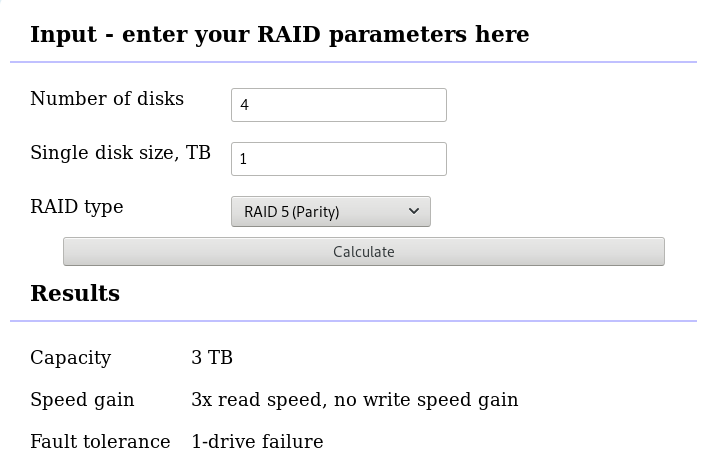

RAID 5 with 4 Drives

This setup has 4 x 1TB hard disks. According to the online raid calculator, that means it is fault tolerant and cheap, but not fast.
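The capacity side of that calculation is simple enough to sketch in shell. This is a minimal illustration, assuming equally sized disks; `raid5_usable` is a hypothetical helper name, not an mdadm command.

```shell
# Usable capacity of a RAID 5 array: one disk's worth of space goes to parity.
# raid5_usable is a hypothetical helper, not part of mdadm.
raid5_usable() {
    disks=$1      # number of member disks (RAID 5 needs at least 3)
    size=$2       # size of the smallest disk, in any unit
    if [ "$disks" -lt 3 ]; then
        echo "RAID 5 needs at least 3 disks" >&2
        return 1
    fi
    echo $(( (disks - 1) * size ))
}

raid5_usable 4 1000   # 4 x 1000 GB disks -> prints 3000
```

The result lines up with the roughly 3000 GB array size that mdadm reports for this array.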

Raid Details

# /sbin/mdadm --detail /dev/md0

The raid configuration is valid:

/dev/md0:

Version : 1.2

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Oct 27 04:38:04 2018

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : ServerTwo:0 (local to host ServerTwo)

UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Events : 60352

Number Major Minor RaidDevice State

4 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

6 8 48 2 active sync /dev/sdd

5 8 0 3 active sync /dev/sda

Examine Verbose Scan

with a more detailed output:

# mdadm -Evvvvs

There are a few bad blocks, although it is perfectly normal for two-year-old disks to have some. smartctl is a tool you should use from time to time.

/dev/sdd:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Name : ServerTwo:0 (local to host ServerTwo)

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Raid Devices : 4

Avail Dev Size : 1953266096 (931.39 GiB 1000.07 GB)

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)

Data Offset : 259072 sectors

Super Offset : 8 sectors

Unused Space : before=258984 sectors, after=3504 sectors

State : clean

Device UUID : bdd41067:b5b243c6:a9b523c4:bc4d4a80

Update Time : Sun Oct 28 09:04:01 2018

Bad Block Log : 512 entries available at offset 72 sectors

Checksum : 6baa02c9 - correct

Events : 60355

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 2

Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sde:

MBR Magic : aa55

Partition[0] : 8388608 sectors at 2048 (type 82)

Partition[1] : 226050048 sectors at 8390656 (type 83)

/dev/sdc:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Name : ServerTwo:0 (local to host ServerTwo)

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Raid Devices : 4

Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)

Data Offset : 259072 sectors

Super Offset : 8 sectors

Unused Space : before=258992 sectors, after=3504 sectors

State : clean

Device UUID : a90e317e:43848f30:0de1ee77:f8912610

Update Time : Sun Oct 28 09:04:01 2018

Checksum : 30b57195 - correct

Events : 60355

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 1

Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sdb:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Name : ServerTwo:0 (local to host ServerTwo)

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Raid Devices : 4

Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)

Data Offset : 259072 sectors

Super Offset : 8 sectors

Unused Space : before=258984 sectors, after=3504 sectors

State : clean

Device UUID : ad7315e5:56cebd8c:75c50a72:893a63db

Update Time : Sun Oct 28 09:04:01 2018

Bad Block Log : 512 entries available at offset 72 sectors

Checksum : b928adf1 - correct

Events : 60355

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 0

Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

/dev/sda:

Magic : a92b4efc

Version : 1.2

Feature Map : 0x0

Array UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Name : ServerTwo:0 (local to host ServerTwo)

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Raid Devices : 4

Avail Dev Size : 1953263024 (931.39 GiB 1000.07 GB)

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 1953262592 (931.39 GiB 1000.07 GB)

Data Offset : 259072 sectors

Super Offset : 8 sectors

Unused Space : before=258984 sectors, after=3504 sectors

State : clean

Device UUID : f4e1da17:e4ff74f0:b1cf6ec8:6eca3df1

Update Time : Sun Oct 28 09:04:01 2018

Bad Block Log : 512 entries available at offset 72 sectors

Checksum : bbe3e7e8 - correct

Events : 60355

Layout : left-symmetric

Chunk Size : 512K

Device Role : Active device 3

Array State : AAAA ('A' == active, '.' == missing, 'R' == replacing)

MisMatch Warning

WARNING: mismatch_cnt is not 0 on /dev/md0

So this is not a critical error; rather, it tells us that there are a few blocks that are “Not Synced Yet” across all disks.
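The counter behind this warning can be read directly from sysfs at /sys/block/md0/md/mismatch_cnt. Below is a minimal sketch of such a check; the function name and the optional second argument (an alternative sysfs root, handy for trying it against a test directory) are assumptions of this example and not part of the raid-check script.

```shell
# Report whether an md array has a non-zero mismatch counter.
# check_mismatch is a hypothetical helper; the second argument only exists
# so the function can be pointed at a test directory instead of /sys/block.
check_mismatch() {
    md=${1:-md0}
    root=${2:-/sys/block}
    file="$root/$md/md/mismatch_cnt"
    if [ ! -r "$file" ]; then
        echo "cannot read $file" >&2
        return 2
    fi
    count=$(cat "$file")
    if [ "$count" -eq 0 ]; then
        echo "$md: no mismatches"
    else
        echo "$md: mismatch_cnt is $count"
        return 1
    fi
}

# Usage on a real system: check_mismatch md0
```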

Status

Checking the Multiple Device (md) driver status:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

We verify that no job is running on the raid.

Repair

We can run a manual repair job:

# echo repair >/sys/block/md0/md/sync_action

Now the status looks like:

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[=========>...........] resync = 45.6% (445779112/976631296) finish=54.0min speed=163543K/sec

unused devices: <none>

Progress

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[============>........] resync = 63.4% (619673060/976631296) finish=38.2min speed=155300K/sec

unused devices: <none>

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[================>....] resync = 81.9% (800492148/976631296) finish=21.6min speed=135627K/sec

unused devices: <none>

Finally

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>
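Instead of reading the progress bar by eye, the percentage can be pulled out of the mdstat text. A minimal sketch, assuming the progress-line format shown in the outputs above; `md_progress` is a hypothetical helper name.

```shell
# Print the percentage of a running resync/check/recovery/reshape,
# or "idle" when no progress line is present.
# Reads mdstat-formatted text from the file given as $1 (default /proc/mdstat).
md_progress() {
    awk '
        match($0, /(resync|check|recovery|reshape) *= *[0-9.]+%/) {
            s = substr($0, RSTART, RLENGTH)   # e.g. "resync = 45.6%"
            sub(/.*= */, "", s)               # keep only "45.6%"
            print s
            found = 1
        }
        END { if (!found) print "idle" }
    ' "${1:-/proc/mdstat}"
}

# Usage on a real system: md_progress
```

With more than one array syncing at once it prints one line per array, which is good enough for a quick look.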

Check

After the repair, it is useful to check the status of our software raid again:

# echo check >/sys/block/md0/md/sync_action

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[=>...................] check = 9.5% (92965776/976631296) finish=91.0min speed=161680K/sec

unused devices: <none>

and finally

# cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdc[1] sda[5] sdd[6] sdb[4]

2929893888 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

unused devices: <none>

The problem

For the last couple of weeks, a backup server I manage has been failing to make backups!

The backup procedure (a script run by the cron daemon) is to rsync data from a primary server to its /backup directory. I was getting cron errors via email, informing me that the previous rsync run had not finished when the new one was starting (the script checks a lock file). This was strange, as the interval between runs is 12 hours. 12 hours were not enough to perform a ~200M data transfer over a 100Mb/s network port. That was really strange.

This is the second time in less than a year that this server is causing problems. A couple of months ago I had to remove a faulty disk from the software raid setup and check the system again. My notes on the matter can be found here:

https://balaskas.gr/blog/2016/10/17/linux-raid-mdadm-md0/

Identify the problem

So let us start to identify the problem. A slow rsync can mean a lot of things, especially over ssh. Replacing network cables, viewing dmesg messages, rebooting servers or even changing the filesystem did not change anything for the better. Time to move on to the disks.

Manage and Monitor software RAID devices

On this server, I use raid5 with four hard disks:

# mdadm --verbose --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sun May 7 11:00:32 2017

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : ServerTwo:0 (local to host ServerTwo)

UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Events : 10496

Number Major Minor RaidDevice State

4 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

6 8 48 2 active sync /dev/sdd

5 8 0 3 active sync /dev/sda

View hardware parameters of hard disk drive

aka test the hard disks:

# hdparm -Tt /dev/sda

/dev/sda:

Timing cached reads: 2490 MB in 2.00 seconds = 1245.06 MB/sec

Timing buffered disk reads: 580 MB in 3.01 seconds = 192.93 MB/sec

# hdparm -Tt /dev/sdb

/dev/sdb:

Timing cached reads: 2520 MB in 2.00 seconds = 1259.76 MB/sec

Timing buffered disk reads: 610 MB in 3.00 seconds = 203.07 MB/sec

# hdparm -Tt /dev/sdc

/dev/sdc:

Timing cached reads: 2512 MB in 2.00 seconds = 1255.43 MB/sec

Timing buffered disk reads: 570 MB in 3.01 seconds = 189.60 MB/sec

# hdparm -Tt /dev/sdd

/dev/sdd:

Timing cached reads: 2 MB in 7.19 seconds = 285.00 kB/sec

Timing buffered disk reads: 2 MB in 5.73 seconds = 357.18 kB/sec

Root Cause

It seems that one of the disks (/dev/sdd) in the raid5 setup is not performing as well as the others. The same hard disk had a problem a few months ago.

The previous time, I removed the disk, did a low-level format, and added it back to the same setup. The system rebuilt the raid5, and after 24 hours everything was performing fine.

However, the same hard disk still seems to have some issues. Now it is time for me to remove it and find a replacement disk.
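Comparing the hdparm figures is easier when they share a unit. The sketch below normalizes the buffered read speed to kB/sec; the helper name is hypothetical, and treating 1 MB as 1024 kB is an assumption of this example.

```shell
# Print the buffered read speed from `hdparm -t` style output in kB/sec.
# Reads the text on stdin and handles the MB/sec and kB/sec units seen above.
# Assumption: 1 MB is treated as 1024 kB.
buffered_kbs() {
    awk '/Timing buffered disk reads/ {
        value = $(NF - 1)     # the number before the unit
        unit = $NF            # "MB/sec" or "kB/sec"
        if (unit == "MB/sec") value *= 1024
        printf "%d\n", value
    }'
}

# Usage on a real system: hdparm -t /dev/sdd | buffered_kbs
```

Fed with the lines above, /dev/sda comes out at 197560 kB/sec and /dev/sdd at 357 kB/sec, which makes the gap hard to miss.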

Remove Faulty disk

I need to manually fail and then remove the faulty disk from the raid setup.

Failing the disk

I have to fail the disk manually because, unlike the previous time, mdadm does not recognize it as failed. I need to tell mdadm that this specific disk is a faulty one:

# mdadm --manage /dev/md0 --fail /dev/sdd

mdadm: set /dev/sdd faulty in /dev/md0

Removing the disk

now it is time to remove the faulty disk from our raid setup:

# mdadm --manage /dev/md0 --remove /dev/sdd

mdadm: hot removed /dev/sdd from /dev/md0

Show details

# mdadm --verbose --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Array Size : 2929893888 (2794.16 GiB 3000.21 GB)

Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)

Raid Devices : 4

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Sun May 7 11:08:44 2017

State : clean, degraded

Active Devices : 3

Working Devices : 3

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Name : ServerTwo:0 (local to host ServerTwo)

UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Events : 10499

Number Major Minor RaidDevice State

4 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

4 0 0 4 removed

5 8 0 3 active sync /dev/sda

Mounting the Backup

Now it’s time to re-mount the backup directory and re-run the rsync script

mount /backup/

and run the rsync with verbose and progress parameters to review the status of syncing

/usr/bin/rsync -zravxP --safe-links --delete-before --partial --protect-args -e ssh 192.168.2.1:/backup/ /backup/

Everything seems ok.

A replacement order has already been placed.

Rsync speeds manage to hit ~10.27MB/s again!

The rsync time for a daily (12h) diff is back to normal:

real 15m18.112s

user 0m34.414s

sys 0m36.850s

Linux Raid

This blog post is created as a mental note for future reference.

Linux Raid has been the de facto way to create and use a software raid in the linux world for decades. RAID stands for: Redundant Array of Independent Disks. Some people use the I for inexpensive disks, I guess that works too!

In simple terms, you can use a lot of hard disks to behave as one disk with special capabilities!

You can use your own inexpensive/independent hard disks as long as they have the same geometry and you can do almost everything. Also it’s pretty easy to learn and use linux raid. If you don’t have the same geometry, then linux raid will use the smallest one from your disks. Modern methods, like LVM and BTRFS, can provide an abstraction layer with more capabilities to their users, but sometimes (or because of something you built a loooong time ago) you need to go back to basics.

And every time -EVERY time- I am searching online for all these cool commands that those cool kids are using. Because what’s more exciting than replacing your decade-old linux raid setup on a typical Saturday night?

Identify your Hard Disks

% find /sys/devices/ -type f -name model -exec cat {} \;

ST1000DX001-1CM1

ST1000DX001-1CM1

ST1000DX001-1CM1

% lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 931.5G 0 disk

sdb 8:16 0 931.5G 0 disk

sdc 8:32 0 931.5G 0 disk

% lsblk -io KNAME,TYPE,SIZE,MODEL

KNAME TYPE SIZE MODEL

sda disk 931.5G ST1000DX001-1CM1

sdb disk 931.5G ST1000DX001-1CM1

sdc disk 931.5G ST1000DX001-1CM1

Create a RAID-5 with 3 Disks

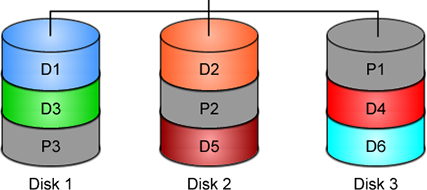

Having 3 hard disks of 1T size, we are going to use the raid-5 level. That means that we have 2T of usable disk space, while one disk’s worth of space holds the parity (raid5 spreads the parity blocks across all three disks rather than keeping them on a dedicated one). Raid5 provides us with the benefit of losing one hard disk without losing any data from our hard disk scheme.

% mdadm -C -v /dev/md0 --level=5 --raid-devices=3 /dev/sda /dev/sdb /dev/sdc

mdadm: layout defaults to left-symmetric

mdadm: layout defaults to left-symmetric

mdadm: chunk size defaults to 512K

mdadm: size set to 5238784K

mdadm: Defaulting to version 1.2 metadata

md/raid:md0 raid level 5 active with 2 out of 3 devices, algorithm 2

mdadm: array /dev/md0 started.

% cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0: active raid5 sdc[3] sdb[2] sda[1]

10477568 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/3] [UUU]

unused devices: <none>

running lsblk will show us our new scheme:

# lsblk -io KNAME,TYPE,SIZE,MODEL

KNAME TYPE SIZE MODEL

sda disk 931.5G ST1000DX001-1CM1

md0 raid5 1.8T

sdb disk 931.5G ST1000DX001-1CM1

md0 raid5 1.8T

sdc disk 931.5G ST1000DX001-1CM1

md0 raid5 1.8T

Save the Linux Raid configuration into a file

Software linux raid means that the raid configuration is actually ON the hard disks. You can take those 3 disks and put them in another linux box and everything will be there!! If you keep your operating system on another hard disk, you can also switch from one linux distro to another; your data will still be on your linux raid5, and you can access it from your new linux distro without any extra software.

But it is a good idea to keep the basic conf in a specific configuration file, so that if you have hardware problems your machine can work out what type of linux raid level you need to have on those broken disks!

% mdadm --detail --scan >> /etc/mdadm.conf

% cat /etc/mdadm.conf

ARRAY /dev/md0 metadata=1.2 name=MyServer:0 UUID=ef5da4df:3e53572e:c3fe1191:925b24cf

UUID - Universally Unique IDentifier

Be aware that the UUID above is the UUID of the linux raid on your disks.

We have not yet created a filesystem on this new disk /dev/md0. If you need to add this filesystem to your fstab file, you can not use the UUID of the linux raid md0 disk; use the UUID of the filesystem itself.

Below is an example from my system:

% blkid

/dev/sda: UUID="ef5da4df-3e53-572e-c3fe-1191925b24cf" UUID_SUB="f4e1da17-e4ff-74f0-b1cf-6ec86eca3df1" LABEL="MyServer:0" TYPE="linux_raid_member"

/dev/sdb: UUID="ef5da4df-3e53-572e-c3fe-1191925b24cf" UUID_SUB="ad7315e5-56ce-bd8c-75c5-0a72893a63db" LABEL="MyServer:0" TYPE="linux_raid_member"

/dev/sdc: UUID="ef5da4df-3e53-572e-c3fe-1191925b24cf" UUID_SUB="a90e317e-4384-8f30-0de1-ee77f8912610" LABEL="MyServer:0" TYPE="linux_raid_member"

/dev/md0: LABEL="data" UUID="48fc963a-2128-4d35-85fb-b79e2546dce7" TYPE="ext4"

% cat /etc/fstab

UUID=48fc963a-2128-4d35-85fb-b79e2546dce7 /backup auto defaults 0 0
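mdadm and blkid print the same array UUID in two different notations, which makes it easy to mix it up with the filesystem UUID. The sketch below converts the mdadm form to the dashed form that blkid shows for the raid members above; `md_uuid_to_blkid` is a hypothetical helper name.

```shell
# Convert an mdadm-style array UUID (four colon-separated groups) to the
# dashed 8-4-4-4-12 form that blkid prints for linux_raid_member devices.
md_uuid_to_blkid() {
    echo "$1" | tr -d ':' |
        sed 's/^\(.\{8\}\)\(.\{4\}\)\(.\{4\}\)\(.\{4\}\)\(.\{12\}\)$/\1-\2-\3-\4-\5/'
}

md_uuid_to_blkid ef5da4df:3e53572e:c3fe1191:925b24cf
# prints ef5da4df-3e53-572e-c3fe-1191925b24cf
```

If the converted value matches the UUID you were about to put in fstab, you picked the raid UUID and not the filesystem one.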

Replacing a hard disk

Hard disks will fail you. This is a fact that every sysadmin knows from day one. Systems will fail at some point in the future. So be prepared and keep backups !!

Failing a disk

Now it’s time to fail (if not already) the disk we want to replace:

% mdadm --manage /dev/md0 --fail /dev/sdb

mdadm: set /dev/sdb faulty in /dev/md0

Remove a broken disk

Here is a simple way to remove a broken disk from your linux raid configuration. Remember that with a three-disk raid5 we can manage with 2 hard disks.

% mdadm --manage /dev/md0 --remove /dev/sdb

mdadm: hot removed /dev/sdb from /dev/md0

% cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sda[1] sdc[3]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

unused devices: <none>

dmesg shows:

% dmesg | tail

md: data-check of RAID array md0

md: minimum _guaranteed_ speed: 1000 KB/sec/disk.

md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for data-check.

md: using 128k window, over a total of 976631296k.

md: md0: data-check done.

md/raid:md0: Disk failure on sdb, disabling device.

md/raid:md0: Operation continuing on 2 devices.

RAID conf printout:

--- level:5 rd:3 wd:2

disk 0, o:0, dev:sda

disk 1, o:1, dev:sdb

disk 2, o:1, dev:sdc

RAID conf printout:

--- level:5 rd:3 wd:2

disk 0, o:0, dev:sda

disk 2, o:1, dev:sdc

md: unbind<sdb>

md: export_rdev(sdb)

Adding a new disk - replacing a broken one

Now it’s time to add a new and (if possible) clean hard disk. Just to be sure, I always zero the first few kilobytes of every disk with dd.
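A minimal sketch of that wipe is below. The function name and the default of 1024 KiB are assumptions of this example, not the exact command used on this server, and the function is destructive when pointed at a real disk.

```shell
# Overwrite the first kilobytes of a target with zeros.
# DESTRUCTIVE on a real disk: double-check the device name before running.
# wipe_head is a hypothetical helper; 1024 KiB is an arbitrary default.
wipe_head() {
    target=$1
    kib=${2:-1024}     # how many KiB to zero
    # conv=notrunc keeps the rest of a regular file intact (useful for testing)
    dd if=/dev/zero of="$target" bs=1024 count="$kib" conv=notrunc 2>/dev/null
}

# Usage on a real system (as root): wipe_head /dev/sdX
```

mdadm also has a `--zero-superblock` option for clearing old raid metadata from a disk, which may be the more targeted choice.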

Using mdadm to add this new disk:

# mdadm --manage /dev/md0 --add /dev/sdb

mdadm: added /dev/sdb

% cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdb[4] sda[1] sdc[3]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[>....................] recovery = 0.2% (2753372/976631296) finish=189.9min speed=85436K/sec

unused devices: <none>

For a 1T hard disk, that is about 3h of recovering data. Keep that in mind when scheduling the maintenance window.
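The estimate follows from the numbers on the progress line: the remaining blocks (1K each) divided by the current speed. A rough sketch with integer arithmetic; `md_eta_minutes` is a hypothetical helper name.

```shell
# Estimate remaining minutes from the numbers on a /proc/mdstat progress line:
# (total - done) blocks of 1K, divided by the speed in K/sec, then by 60.
md_eta_minutes() {
    done_k=$1; total_k=$2; speed_k=$3
    echo $(( (total_k - done_k) / speed_k / 60 ))
}

md_eta_minutes 2753372 976631296 85436   # prints 189
```

That agrees with the finish=189.9min that mdstat reports; the speed changes during a rebuild, so treat it as a ballpark figure.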

after a few minutes:

% cat /proc/mdstat

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdb[4] sda[1] sdc[3]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[>....................] recovery = 4.8% (47825800/976631296) finish=158.3min speed=97781K/sec

unused devices: <none>

mdadm shows:

% mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Wed Feb 26 21:00:17 2014

Raid Level : raid5

Array Size : 1953262592 (1862.78 GiB 2000.14 GB)

Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)

Raid Devices : 3

Total Devices : 3

Persistence : Superblock is persistent

Update Time : Mon Oct 17 21:52:05 2016

State : clean, degraded, recovering

Active Devices : 2

Working Devices : 3

Failed Devices : 0

Spare Devices : 1

Layout : left-symmetric

Chunk Size : 512K

Rebuild Status : 58% complete

Name : MyServer:0 (local to host MyServer)

UUID : ef5da4df:3e53572e:c3fe1191:925b24cf

Events : 554

Number Major Minor RaidDevice State

1 8 16 1 active sync /dev/sda

4 8 32 0 spare rebuilding /dev/sdb

3 8 48 2 active sync /dev/sdc

You can use the watch command, which refreshes the output in your terminal every two seconds:

# watch cat /proc/mdstat

Every 2.0s: cat /proc/mdstat Mon Oct 17 21:53:34 2016

Personalities : [raid6] [raid5] [raid4]

md0 : active raid5 sdb[4] sda[1] sdc[3]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [3/2] [_UU]

[===========>.........] recovery = 59.4% (580918844/976631296) finish=69.2min speed=95229K/sec

unused devices: <none>

Growing a Linux Raid

Even so … 2T is not a lot of disk space these days! If you need to grow (extend) your linux raid, then you need hard disks with the same geometry (or larger).

The steps for growing your linux raid are also simple:

# Unmount the linux raid device:

% umount /dev/md0

# Add the new disk

% mdadm --add /dev/md0 /dev/sdd

# Check mdstat

% cat /proc/mdstat

# Grow linux raid by one device

% mdadm --grow /dev/md0 --raid-devices=4

# watch mdstat for reshaping to complete - also 3h+ something

% watch cat /proc/mdstat

# Filesystem check your linux raid device

% fsck -y /dev/md0

# Resize - Important

% resize2fs /dev/md0

But sometimes life happens …

Need 1 spare to avoid degraded array, and only have 0.

mdadm: Need 1 spare to avoid degraded array, and only have 0.

or

mdadm: Failed to initiate reshape!

Sometimes you get an error informing you that you can not grow your linux raid device! It’s not time to panic or flee the scene. You’ve got this. You have already made a recent backup before you started, and you are also reading this blog post!

You need an (extra) backup-file!

% mdadm --grow --raid-devices=4 --backup-file=/tmp/backup.file /dev/md0

mdadm: Need to backup 3072K of critical section..

% cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid5 sda[4] sdb[0] sdd[3] sdc[1]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[>....................] reshape = 0.0% (66460/976631296) finish=1224.4min speed=13292K/sec

unused devices: <none>

1224.4min seems a lot !!!

dmesg shows:

% dmesg

[ 36.477638] md: Autodetecting RAID arrays.

[ 36.477649] md: Scanned 0 and added 0 devices.

[ 36.477654] md: autorun ...

[ 36.477658] md: ... autorun DONE.

[ 602.987144] md: bind<sda>

[ 603.219025] RAID conf printout:

[ 603.219036] --- level:5 rd:3 wd:3

[ 603.219044] disk 0, o:1, dev:sdb

[ 603.219050] disk 1, o:1, dev:sdc

[ 603.219055] disk 2, o:1, dev:sdd

[ 608.650884] RAID conf printout:

[ 608.650896] --- level:5 rd:3 wd:3

[ 608.650903] disk 0, o:1, dev:sdb

[ 608.650910] disk 1, o:1, dev:sdc

[ 608.650915] disk 2, o:1, dev:sdd

[ 684.308820] RAID conf printout:

[ 684.308832] --- level:5 rd:4 wd:4

[ 684.308840] disk 0, o:1, dev:sdb

[ 684.308846] disk 1, o:1, dev:sdc

[ 684.308851] disk 2, o:1, dev:sdd

[ 684.308855] disk 3, o:1, dev:sda

[ 684.309079] md: reshape of RAID array md0

[ 684.309089] md: minimum _guaranteed_ speed: 1000 KB/sec/disk.

[ 684.309094] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for reshape.

[ 684.309105] md: using 128k window, over a total of 976631296k.

mdstat

% cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid5 sda[4] sdb[0] sdd[3] sdc[1]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[>....................] reshape = 0.0% (349696/976631296) finish=697.9min speed=23313K/sec

unused devices: <none>

ok it’s now 670 minutes

Time to use watch:

(after a while)

% watch cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid5 sda[4] sdb[0] sdd[3] sdc[1]

1953262592 blocks super 1.2 level 5, 512k chunk, algorithm 2 [4/4] [UUUU]

[===========>......] reshape = 66.1% (646514752/976631296) finish=157.4min speed=60171K/sec

unused devices: <none>

mdadm shows:

% mdadm --detail /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Thu Feb 6 13:06:34 2014

Raid Level : raid5

Array Size : 1953262592 (1862.78 GiB 2000.14 GB)

Used Dev Size : 976631296 (931.39 GiB 1000.07 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Sat Oct 22 14:59:33 2016

State : clean, reshaping

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : left-symmetric

Chunk Size : 512K

Reshape Status : 66% complete

Delta Devices : 1, (3->4)

Name : MyServer:0

UUID : d635095e:50457059:7e6ccdaf:7da91c9b

Events : 1536

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

3 8 48 2 active sync /dev/sdd

4 8 0 3 active sync /dev/sda

Be patient and keep an eye on mdstat under proc.

So basically those are the steps; hopefully you will find them useful.