systemd

Recent systemd versions include the systemd-importd daemon.

That means we can use machinectl to import a tar or a raw image from the internet and use it with the systemd-nspawn command.

Here is an example.

machinectl

From my Arch Linux box:

# cat /etc/arch-release

Arch Linux release

CentOS 7

We can download the CentOS 7 docker image as a tar archive, here from the CentOS sig-cloud-instance-images repository on GitHub:

# machinectl pull-tar --verify=no https://github.com/CentOS/sig-cloud-instance-images/raw/79db851f4016c283fb3d30f924031f5a866d51a1/docker/centos-7-docker.tar.xz

...

Created new local image 'centos-7-docker'.

Operation completed successfully.

Exiting.

We can verify that:

# ls -la /var/lib/machines/centos-7-docker

total 28

dr-xr-xr-x 1 root root 158 Jan 7 18:59 .

drwx------ 1 root root 488 Feb 1 21:17 ..

-rw-r--r-- 1 root root 11970 Jan 7 18:59 anaconda-post.log

lrwxrwxrwx 1 root root 7 Jan 7 18:58 bin -> usr/bin

drwxr-xr-x 1 root root 0 Jan 7 18:58 dev

drwxr-xr-x 1 root root 1940 Jan 7 18:59 etc

drwxr-xr-x 1 root root 0 Nov 5 2016 home

lrwxrwxrwx 1 root root 7 Jan 7 18:58 lib -> usr/lib

lrwxrwxrwx 1 root root 9 Jan 7 18:58 lib64 -> usr/lib64

drwxr-xr-x 1 root root 0 Nov 5 2016 media

drwxr-xr-x 1 root root 0 Nov 5 2016 mnt

drwxr-xr-x 1 root root 0 Nov 5 2016 opt

drwxr-xr-x 1 root root 0 Jan 7 18:58 proc

dr-xr-x--- 1 root root 120 Jan 7 18:59 root

drwxr-xr-x 1 root root 104 Jan 7 18:59 run

lrwxrwxrwx 1 root root 8 Jan 7 18:58 sbin -> usr/sbin

drwxr-xr-x 1 root root 0 Nov 5 2016 srv

drwxr-xr-x 1 root root 0 Jan 7 18:58 sys

drwxrwxrwt 1 root root 140 Jan 7 18:59 tmp

drwxr-xr-x 1 root root 106 Jan 7 18:58 usr

drwxr-xr-x 1 root root 160 Jan 7 18:58 var
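The local image name reported above matches the last component of the URL with its archive suffixes removed. A minimal sketch of that naming, assuming only the suffixes seen in this post matter; image_name_from_url is a hypothetical helper for illustration, not part of systemd:

```shell
# Approximate the default local image name for a pull-tar / pull-raw URL.
# Assumption: strip the path, then a compression suffix, then .tar or .raw.
image_name_from_url() (
    name="${1##*/}"        # keep only the last path component
    name="${name%.xz}"     # drop a compression suffix, if present
    name="${name%.gz}"
    name="${name%.bz2}"
    name="${name%.tar}"    # drop the archive or image suffix
    name="${name%.raw}"
    printf '%s\n' "$name"
)

image_name_from_url "https://github.com/CentOS/sig-cloud-instance-images/raw/79db851f4016c283fb3d30f924031f5a866d51a1/docker/centos-7-docker.tar.xz"
# prints: centos-7-docker
```

Knowing the name in advance is handy, because it is also the directory under /var/lib/machines and the value passed to --machine.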

systemd-nspawn

Now we can test it:

[root@myhomepc ~]# systemd-nspawn --machine=centos-7-docker

Spawning container centos-7-docker on /var/lib/machines/centos-7-docker.

Press ^] three times within 1s to kill container.

[root@centos-7-docker ~]#

[root@centos-7-docker ~]#

[root@centos-7-docker ~]# cat /etc/redhat-release

CentOS Linux release 7.4.1708 (Core)

[root@centos-7-docker ~]#

[root@centos-7-docker ~]# exit

logout

Container centos-7-docker exited successfully.

And now, back on our own system:

[root@myhomepc ~]#

[root@myhomepc ~]#

[root@myhomepc ~]# cat /etc/arch-release

Arch Linux release
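Since a pulled tar image is just a directory tree under /var/lib/machines, the same check can be scripted without entering the container. A small sketch, assuming the image ships an /etc/os-release file; os_pretty_name is a hypothetical helper name:

```shell
# Print PRETTY_NAME from the os-release file below a given root directory.
# $1 = root of the tree to inspect (defaults to the running system).
os_pretty_name() (
    root="${1:-/}"
    # The function body is a subshell, so sourced variables do not leak out.
    . "$root/etc/os-release" && printf '%s\n' "$PRETTY_NAME"
)

# Usage (as root, because /var/lib/machines is not world-readable):
#   os_pretty_name /var/lib/machines/centos-7-docker
#   os_pretty_name /
```

The file is sourced as shell, which is fine for a quick look at an image you trust but not something to do with untrusted trees.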

Ubuntu 16.04.4 LTS

Ubuntu example:

# machinectl pull-tar --verify=no https://github.com/tianon/docker-brew-ubuntu-core/raw/46511cf49ad5d2628f3e8d88e1f8b18699a3ad8f/xenial/ubuntu-xenial-core-cloudimg-amd64-root.tar.gz

# systemd-nspawn --machine=ubuntu-xenial-core-cloudimg-amd64-root

Spawning container ubuntu-xenial-core-cloudimg-amd64-root on /var/lib/machines/ubuntu-xenial-core-cloudimg-amd64-root.

Press ^] three times within 1s to kill container.

Timezone Europe/Athens does not exist in container, not updating container timezone.

root@ubuntu-xenial-core-cloudimg-amd64-root:~#

root@ubuntu-xenial-core-cloudimg-amd64-root:~# cat /etc/os-release

NAME="Ubuntu"

VERSION="16.04.4 LTS (Xenial Xerus)"

ID=ubuntu

ID_LIKE=debian

PRETTY_NAME="Ubuntu 16.04.4 LTS"

VERSION_ID="16.04"

HOME_URL="http://www.ubuntu.com/"

SUPPORT_URL="http://help.ubuntu.com/"

BUG_REPORT_URL="http://bugs.launchpad.net/ubuntu/"

VERSION_CODENAME=xenial

UBUNTU_CODENAME=xenial

root@ubuntu-xenial-core-cloudimg-amd64-root:~# exit

logout

Container ubuntu-xenial-core-cloudimg-amd64-root exited successfully.

# cat /etc/os-release

NAME="Arch Linux"

PRETTY_NAME="Arch Linux"

ID=arch

ID_LIKE=archlinux

ANSI_COLOR="0;36"

HOME_URL="https://www.archlinux.org/"

SUPPORT_URL="https://bbs.archlinux.org/"

BUG_REPORT_URL="https://bugs.archlinux.org/"

Docker Swarm

Docker Swarm is the native Docker container orchestration system. In simple terms, it lets you use multiple docker machines (hosts) to run multiple docker containers (replicas). It is best to work with Docker Engine v1.12 and above, as from that version the docker engine includes docker swarm natively.

In not-so-simple terms: docker instances (engines) running on multiple machines (nodes), communicating with each other (VXLAN) as a cluster (swarm).

Nodes

To begin with, we need to create our docker machines. One of the nodes must be the manager and the others will run as workers. For testing purposes I will run three (3) docker engines:

- Manager Docker Node: myengine0

- Worker Docker Node 1: myengine1

- Worker Docker Node 2: myengine2

Drivers

A docker node is a machine that runs the docker engine in swarm mode. The machine can be physical or virtual: a VirtualBox VM, a cloud instance, a VPS, an AWS instance, etc.

At the time of this blog post, docker officially supports the following drivers natively:

- Amazon Web Services

- Microsoft Azure

- Digital Ocean

- Exoscale

- Google Compute Engine

- Generic

- Microsoft Hyper-V

- OpenStack

- Rackspace

- IBM Softlayer

- Oracle VirtualBox

- VMware vCloud Air

- VMware Fusion

- VMware vSphere

QEMU - KVM

There are also unofficial drivers.

I will use the QEMU/KVM driver from this github repository: https://github.com/dhiltgen/docker-machine-kvm

The simplest way to add the kvm driver is this:

> cd /usr/local/bin/

> sudo -s

# wget -c https://github.com/dhiltgen/docker-machine-kvm/releases/download/v0.7.0/docker-machine-driver-kvm

# chmod 0750 docker-machine-driver-kvm
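docker-machine finds third-party drivers by looking for an executable named docker-machine-driver-<name> on the PATH, which is why the binary is dropped into /usr/local/bin. A quick sanity check could look like the sketch below; driver_binary and have_driver are hypothetical helper names:

```shell
# Build the executable name docker-machine expects for a given driver.
driver_binary() {
    printf 'docker-machine-driver-%s\n' "$1"
}

# Succeed if that executable can be found on the PATH of the current user.
have_driver() {
    command -v "$(driver_binary "$1")" >/dev/null 2>&1
}

if have_driver kvm; then
    echo "kvm driver found"
else
    echo "kvm driver not found on PATH for this user"
fi
```

Run the check as the user who will call docker-machine: with mode 0750 on a root-owned file, only root and members of the file's group can execute the driver.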

Docker Machines

The next step is to create our docker machines. First install docker-machine; look for it in your distro’s repositories:

# yes | pacman -S docker-machine

Manager

$ docker-machine create -d kvm myengine0

Running pre-create checks...

Creating machine...

(myengine0) Image cache directory does not exist, creating it at /home/ebal/.docker/machine/cache...

(myengine0) No default Boot2Docker ISO found locally, downloading the latest release...

(myengine0) Latest release for github.com/boot2docker/boot2docker is v1.13.1

(myengine0) Downloading /home/ebal/.docker/machine/cache/boot2docker.iso from https://github.com/boot2docker/boot2docker/releases/download/v1.13.1/boot2docker.iso...

(myengine0) 0%....10%....20%....30%....40%....50%....60%....70%....80%....90%....100%

(myengine0) Copying /home/ebal/.docker/machine/cache/boot2docker.iso to /home/ebal/.docker/machine/machines/myengine0/boot2docker.iso...

Waiting for machine to be running, this may take a few minutes...

Detecting operating system of created instance...

Waiting for SSH to be available...

Detecting the provisioner...

Provisioning with boot2docker...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

Checking connection to Docker...

Docker is up and running!

To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env myengine0

Worker 1

$ docker-machine create -d kvm myengine1

Running pre-create checks...

Creating machine...

(myengine1) Copying /home/ebal/.docker/machine/cache/boot2docker.iso to /home/ebal/.docker/machine/machines/myengine1/boot2docker.iso...

Waiting for machine to be running, this may take a few minutes...

Detecting operating system of created instance...

Waiting for SSH to be available...

Detecting the provisioner...

Provisioning with boot2docker...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

Checking connection to Docker...

Docker is up and running!

To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env myengine1

Worker 2

$ docker-machine create -d kvm myengine2

Running pre-create checks...

Creating machine...

(myengine2) Copying /home/ebal/.docker/machine/cache/boot2docker.iso to /home/ebal/.docker/machine/machines/myengine2/boot2docker.iso...

Waiting for machine to be running, this may take a few minutes...

Detecting operating system of created instance...

Waiting for SSH to be available...

Detecting the provisioner...

Provisioning with boot2docker...

Copying certs to the local machine directory...

Copying certs to the remote machine...

Setting Docker configuration on the remote daemon...

Checking connection to Docker...

Docker is up and running!

To see how to connect your Docker Client to the Docker Engine running on this virtual machine, run: docker-machine env myengine2
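The three create commands differ only in the machine name, so they can be wrapped in a loop. The sketch below adds a dry-run switch so the commands can be previewed first; run, create_nodes and DRY_RUN are names made up for this example:

```shell
# Execute a command, or only print it when DRY_RUN is set to a non-empty value.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create one kvm docker machine per name given as an argument.
create_nodes() {
    for node in "$@"; do
        run docker-machine create -d kvm "$node"
    done
}

# Preview only; nothing is created while DRY_RUN is set.
DRY_RUN=1
create_nodes myengine0 myengine1 myengine2
```

Unsetting DRY_RUN and calling create_nodes again would run the real docker-machine commands, one machine after the other.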

List your Machines

$ docker-machine env myengine0

export DOCKER_TLS_VERIFY="1"

export DOCKER_HOST="tcp://192.168.42.126:2376"

export DOCKER_CERT_PATH="/home/ebal/.docker/machine/machines/myengine0"

export DOCKER_MACHINE_NAME="myengine0"

# Run this command to configure your shell:

# eval $(docker-machine env myengine0)

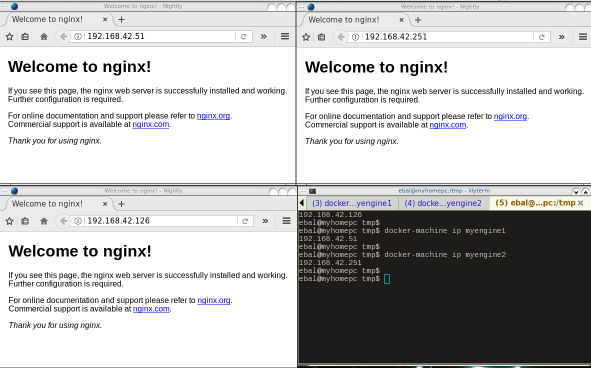

$ docker-machine ls

NAME ACTIVE DRIVER STATE URL SWARM DOCKER ERRORS

myengine0 - kvm Running tcp://192.168.42.126:2376 v1.13.1

myengine1 - kvm Running tcp://192.168.42.51:2376 v1.13.1

myengine2 - kvm Running tcp://192.168.42.251:2376 v1.13.1

Inspect

You can get the IP of your machines with:

$ docker-machine ip myengine0

192.168.42.126

$ docker-machine ip myengine1

192.168.42.51

$ docker-machine ip myengine2

192.168.42.251

or with ls, as seen above. Use the inspect parameter for the full information about a machine in JSON format:

$ docker-machine inspect myengine0

If you have jq, you can filter out some of the info:

$ docker-machine inspect myengine0 | jq .'Driver.DiskPath'

"/home/ebal/.docker/machine/machines/myengine0/myengine0.img"
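The URL column of docker-machine ls (and DOCKER_HOST from docker-machine env) already carries each machine's address and port. A small sketch for splitting such a URL with plain parameter expansion; url_host and url_port are hypothetical helper names:

```shell
# Split a docker-machine URL such as tcp://192.168.42.126:2376.
url_host() (
    rest="${1#*://}"               # strip the scheme
    printf '%s\n' "${rest%%:*}"    # keep everything before the port
)

url_port() (
    printf '%s\n' "${1##*:}"       # keep everything after the last colon
)

url_host "tcp://192.168.42.126:2376"   # prints: 192.168.42.126
url_port "tcp://192.168.42.126:2376"   # prints: 2376
```

This only handles the scheme://host:port form shown in this post; for anything more involved, docker-machine ip and docker-machine inspect remain the safer source.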

SSH

To get a shell inside a kvm docker machine, you can use ssh:

Manager

$ docker-machine ssh myengine0

## .

## ## ## ==

## ## ## ## ## ===

/"""""""""""""""""___/ ===

~~~ {~~ ~~~~ ~~~ ~~~~ ~~~ ~ / ===- ~~~

______ o __/

__/

___________/

_ _ ____ _ _

| |__ ___ ___ | |_|___ __| | ___ ___| | _____ _ __

| '_ / _ / _ | __| __) / _` |/ _ / __| |/ / _ '__|

| |_) | (_) | (_) | |_ / __/ (_| | (_) | (__| < __/ |

|_.__/ ___/ ___/ __|_______,_|___/ ___|_|____|_|

Boot2Docker version 1.13.1, build HEAD : b7f6033 - Wed Feb 8 20:31:48 UTC 2017

Docker version 1.13.1, build 092cba3

Worker 1

$ docker-machine ssh myengine1

## .

## ## ## ==

## ## ## ## ## ===

/"""""""""""""""""___/ ===

~~~ {~~ ~~~~ ~~~ ~~~~ ~~~ ~ / ===- ~~~

______ o __/

__/

___________/

_ _ ____ _ _

| |__ ___ ___ | |_|___ __| | ___ ___| | _____ _ __

| '_ / _ / _ | __| __) / _` |/ _ / __| |/ / _ '__|

| |_) | (_) | (_) | |_ / __/ (_| | (_) | (__| < __/ |

|_.__/ ___/ ___/ __|_______,_|___/ ___|_|____|_|

Boot2Docker version 1.13.1, build HEAD : b7f6033 - Wed Feb 8 20:31:48 UTC 2017

Docker version 1.13.1, build 092cba3

Worker 2

$ docker-machine ssh myengine2

## .

## ## ## ==

## ## ## ## ## ===

/"""""""""""""""""___/ ===

~~~ {~~ ~~~~ ~~~ ~~~~ ~~~ ~ / ===- ~~~

______ o __/

__/

___________/

_ _ ____ _ _

| |__ ___ ___ | |_|___ __| | ___ ___| | _____ _ __

| '_ / _ / _ | __| __) / _` |/ _ / __| |/ / _ '__|

| |_) | (_) | (_) | |_ / __/ (_| | (_) | (__| < __/ |

|_.__/ ___/ ___/ __|_______,_|___/ ___|_|____|_|

Boot2Docker version 1.13.1, build HEAD : b7f6033 - Wed Feb 8 20:31:48 UTC 2017

Docker version 1.13.1, build 092cba3

Swarm Cluster

Now it’s time to build a swarm of docker machines!

Initialize the manager

docker@myengine0:~$ docker swarm init --advertise-addr 192.168.42.126

Swarm initialized: current node (jwyrvepkz29ogpcx18lgs8qhx) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join \

--token SWMTKN-1-4vpiktzp68omwayfs4c3j5mrdrsdavwnewx5834g9cp6p1koeo-bgcwtrz6srt45qdxswnneb6i9 \

192.168.42.126:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Join Worker 1

docker@myengine1:~$ docker swarm join \

> --token SWMTKN-1-4vpiktzp68omwayfs4c3j5mrdrsdavwnewx5834g9cp6p1koeo-bgcwtrz6srt45qdxswnneb6i9 \

> 192.168.42.126:2377

This node joined a swarm as a worker.

Join Worker 2

docker@myengine2:~$ docker swarm join \

> --token SWMTKN-1-4vpiktzp68omwayfs4c3j5mrdrsdavwnewx5834g9cp6p1koeo-bgcwtrz6srt45qdxswnneb6i9 \

> 192.168.42.126:2377

This node joined a swarm as a worker.

From the manager

docker@myengine0:~$ docker node ls

ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS

jwyrvepkz29ogpcx18lgs8qhx * myengine0 Ready Active Leader

m5akhw7j60fru2d0an4lnsgr3 myengine2 Ready Active

sfau3r42bqbhtz1c6v9hnld67 myengine1 Ready Active
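The join step is the same on every worker: a token, the manager address and the swarm port. A minimal sketch that assembles the command line from those parts; join_command is a hypothetical helper and the token below is a placeholder, not a real one:

```shell
# Print the join command for a worker.
# $1 = join token, $2 = manager address, $3 = optional port (default 2377)
join_command() {
    printf 'docker swarm join --token %s %s:%s\n' "$1" "$2" "${3:-2377}"
}

join_command "SWMTKN-1-example" "192.168.42.126"
# prints: docker swarm join --token SWMTKN-1-example 192.168.42.126:2377
```

On a live setup the token can be read from the manager with docker swarm join-token -q worker, and the resulting line can be sent to each worker through docker-machine ssh, which avoids copying the token by hand.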

Info

We can find more information about the docker machines by running the docker info command once we have ssh-ed into the nodes,

e.g. the swarm part:

manager

Swarm: active

NodeID: jwyrvepkz29ogpcx18lgs8qhx

Is Manager: true

ClusterID: 8fjv5fzp0wtq9hibl7w2v65cs

Managers: 1

Nodes: 3

Orchestration:

Task History Retention Limit: 5

Raft:

Snapshot Interval: 10000

Number of Old Snapshots to Retain: 0

Heartbeat Tick: 1

Election Tick: 3

Dispatcher:

Heartbeat Period: 5 seconds

CA Configuration:

Expiry Duration: 3 months

Node Address: 192.168.42.126

Manager Addresses:

192.168.42.126:2377

worker 1

Swarm: active

NodeID: sfau3r42bqbhtz1c6v9hnld67

Is Manager: false

Node Address: 192.168.42.51

Manager Addresses:

192.168.42.126:2377

worker 2

Swarm: active

NodeID: m5akhw7j60fru2d0an4lnsgr3

Is Manager: false

Node Address: 192.168.42.251

Manager Addresses:

192.168.42.126:2377
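To pull a single value out of that text instead of reading it by eye, the output can be filtered on the field name. A sketch under the assumption that the fields keep the "Name: value" layout shown above; info_field is a hypothetical helper:

```shell
# Print the value of one "Name: value" field from docker info text on stdin.
# $1 = field name exactly as printed, e.g. "Is Manager"
info_field() {
    sed -n "s/^[[:space:]]*$1:[[:space:]]*//p" | head -n 1
}

printf 'Swarm: active\n NodeID: sfau3r42bqbhtz1c6v9hnld67\n Is Manager: false\n' \
    | info_field "Is Manager"
# prints: false
```

On a node this would be used as docker info | info_field "Is Manager". The field name goes straight into a sed pattern, so it should not contain characters that are special in regular expressions.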

Services

Now it’s time to test our docker swarm by running a container service across our entire fleet!

For testing purposes we chose 6 replicas of an nginx container:

docker@myengine0:~$ docker service create --replicas 6 -p 80:80 --name web nginx

ql6iogo587ibji7e154m7npal

List images

docker@myengine0:~$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nginx <none> db079554b4d2 9 days ago 182 MB

List of services

Depending on your docker registry or your internet connection, it may take a while until all the replicas are running:

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 0/6 nginx:latest

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 2/6 nginx:latest

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 3/6 nginx:latest

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 6/6 nginx:latest

docker@myengine0:~$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

t3v855enecgv web.1 nginx:latest myengine1 Running Running 17 minutes ago

xgwi91plvq00 web.2 nginx:latest myengine2 Running Running 17 minutes ago

0l6h6a0va2fy web.3 nginx:latest myengine0 Running Running 16 minutes ago

qchj744k0e45 web.4 nginx:latest myengine1 Running Running 17 minutes ago

udimh2bokl8k web.5 nginx:latest myengine2 Running Running 17 minutes ago

t50yhhtngbac web.6 nginx:latest myengine0 Running Running 16 minutes ago
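Instead of re-running docker service ls by hand until it shows 6/6, the REPLICAS column can be checked in a script. A sketch that works on the plain text layout shown above; service_replicas and replicas_ready are hypothetical helper names, and the sample text is copied from this post:

```shell
# Print the REPLICAS column of one service from `docker service ls` text on stdin.
service_replicas() {
    awk -v name="$1" '$2 == name { print $4 }'
}

# Succeed when running equals desired, e.g. "6/6".
replicas_ready() {
    [ -n "$1" ] && [ "${1%/*}" = "${1#*/}" ]
}

sample='ID NAME MODE REPLICAS IMAGE
ql6iogo587ib web replicated 2/6 nginx:latest'

state=$(printf '%s\n' "$sample" | service_replicas web)
if replicas_ready "$state"; then
    echo "web is ready ($state)"
else
    echo "web is still starting ($state)"
fi
# prints: web is still starting (2/6)
```

On the manager, the same helpers could drive a wait loop such as: until replicas_ready "$(docker service ls | service_replicas web)"; do sleep 2; done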

Browser

To verify that our replicas are running as they should:

Scaling a service

It’s really interesting that we can scale our replicas up or down on the fly!

from the manager

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 6/6 nginx:latest

docker@myengine0:~$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

t3v855enecgv web.1 nginx:latest myengine1 Running Running 3 days ago

xgwi91plvq00 web.2 nginx:latest myengine2 Running Running 3 days ago

0l6h6a0va2fy web.3 nginx:latest myengine0 Running Running 3 days ago

qchj744k0e45 web.4 nginx:latest myengine1 Running Running 3 days ago

udimh2bokl8k web.5 nginx:latest myengine2 Running Running 3 days ago

t50yhhtngbac web.6 nginx:latest myengine0 Running Running 3 days ago

Scale Down

from the manager

$ docker service scale web=3

web scaled to 3

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 3/3 nginx:latest

docker@myengine0:~$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

0l6h6a0va2fy web.3 nginx:latest myengine0 Running Running 3 days ago

qchj744k0e45 web.4 nginx:latest myengine1 Running Running 3 days ago

udimh2bokl8k web.5 nginx:latest myengine2 Running Running 3 days ago

Scale Up

from the manager

docker@myengine0:~$ docker service scale web=8

web scaled to 8

docker@myengine0:~$

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 3/8 nginx:latest

docker@myengine0:~$

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 4/8 nginx:latest

docker@myengine0:~$

docker@myengine0:~$ docker service ls

ID NAME MODE REPLICAS IMAGE

ql6iogo587ib web replicated 8/8 nginx:latest

docker@myengine0:~$

docker@myengine0:~$

docker@myengine0:~$ docker service ps web

ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS

lyhoyseg8844 web.1 nginx:latest myengine1 Running Running 7 seconds ago

w3j9bhcn9f6e web.2 nginx:latest myengine2 Running Running 8 seconds ago

0l6h6a0va2fy web.3 nginx:latest myengine0 Running Running 3 days ago

qchj744k0e45 web.4 nginx:latest myengine1 Running Running 3 days ago

udimh2bokl8k web.5 nginx:latest myengine2 Running Running 3 days ago

vr8jhbum8tlg web.6 nginx:latest myengine1 Running Running 7 seconds ago

m4jzati4ddpp web.7 nginx:latest myengine2 Running Running 8 seconds ago

7jek2zvuz6fs web.8 nginx:latest myengine0 Running Running 11 seconds ago
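To see how the swarm spread the eight replicas, the docker service ps output can be summarised per node. A sketch that assumes the column layout shown above (node in the fourth column, desired state in the fifth); tasks_per_node is a hypothetical helper, and the sample rows are copied from the listing:

```shell
# Count running tasks per node from `docker service ps` text on stdin.
tasks_per_node() {
    awk 'NR > 1 && $5 == "Running" { count[$4]++ }
         END { for (node in count) print node, count[node] }' | sort
}

tasks_per_node <<'EOF'
ID NAME IMAGE NODE DESIRED STATE CURRENT STATE ERROR PORTS
lyhoyseg8844 web.1 nginx:latest myengine1 Running Running 7 seconds ago
w3j9bhcn9f6e web.2 nginx:latest myengine2 Running Running 8 seconds ago
0l6h6a0va2fy web.3 nginx:latest myengine0 Running Running 3 days ago
qchj744k0e45 web.4 nginx:latest myengine1 Running Running 3 days ago
udimh2bokl8k web.5 nginx:latest myengine2 Running Running 3 days ago
vr8jhbum8tlg web.6 nginx:latest myengine1 Running Running 7 seconds ago
m4jzati4ddpp web.7 nginx:latest myengine2 Running Running 8 seconds ago
7jek2zvuz6fs web.8 nginx:latest myengine0 Running Running 11 seconds ago
EOF
# prints:
# myengine0 2
# myengine1 3
# myengine2 3
```

On the manager this would be docker service ps web | tasks_per_node. Rows whose fifth column is not Running are skipped, so only tasks the swarm wants running are counted.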